Coordination Patterns for Distributed Rate Limiting in Multi-Carrier Integration: Preventing Race Conditions Without Sacrificing Performance

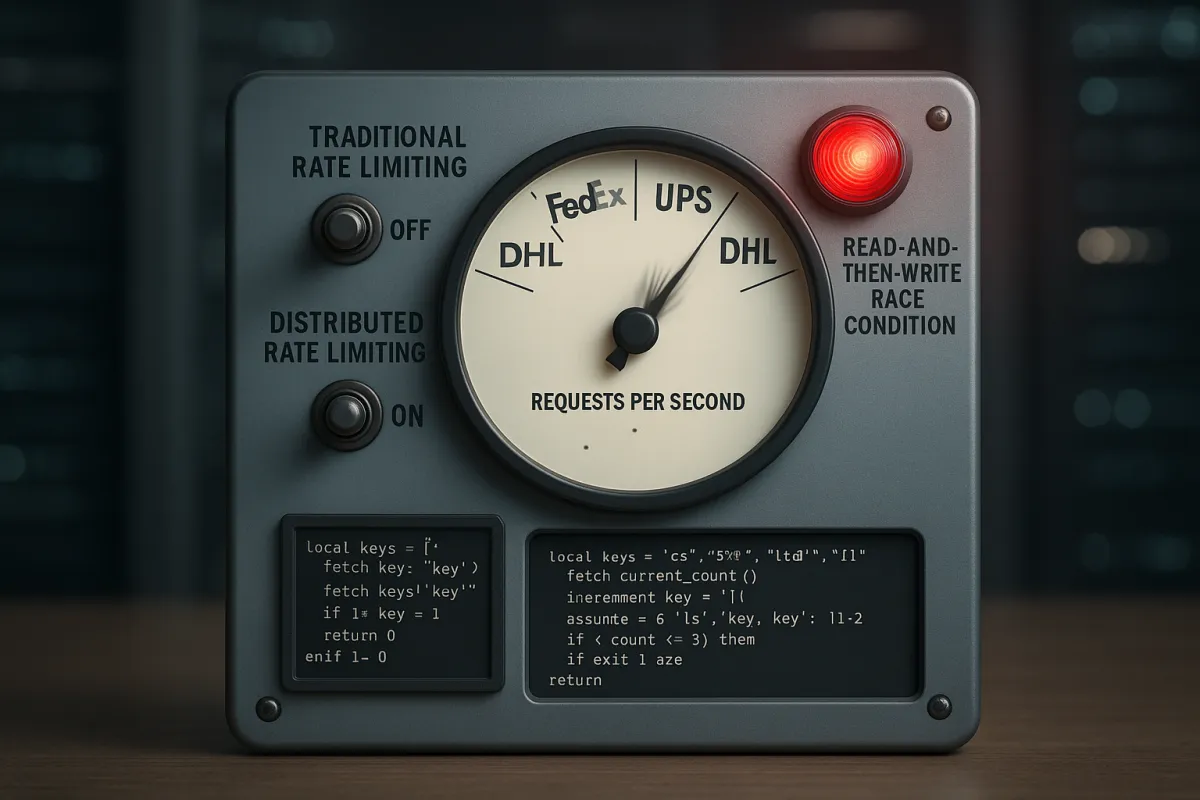

When your multi-carrier integration platform starts handling thousands of requests per second across FedEx, UPS, DHL, and regional carriers, traditional rate limiting breaks down. The culprit? In a distributed environment, the "read-and-then-write" behavior creates a race condition, which means the rate limiter can at times be too lenient. If only a single token remains and two servers' Redis operations interleave, both requests would be let through. The result is blown rate limits, angry carriers, and that familiar email about API access suspension.

Most teams discover this problem the hard way. You've built what seems like a solid token bucket implementation, tested it locally, and deployed across multiple servers. Everything works perfectly until peak shipping season arrives. Suddenly, your FedEx integration is making 150 requests per minute instead of the allowed 100, and your UPS connection starts getting throttled responses.

The Hidden Race Condition in Carrier Integration Rate Limiting

The fundamental problem lies in how distributed rate limiters traditionally work. If you've ever built rate limiting or login throttling with Redis, chances are you've used INCR and EXPIRE. But if you're doing them in two separate steps, you might be in for a surprise—especially under high load. This setup breaks under concurrency.

Consider this typical implementation:

    const count = await redis.incr(`fedex:${apiKey}`)
    if (count === 1) {
      await redis.expire(`fedex:${apiKey}`, 60)
    }
    return count <= RATE_LIMIT

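Sound familiar? This code has a critical flaw: INCR and EXPIRE are two separate round trips. If the process crashes or the connection drops between them, the key is left without a TTL and the counter never resets. And when two requests for the same API key arrive at the same time, both run this logic in parallel against the same key, so any variant that reads the count before writing it can let both through.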

In carrier integration systems, this becomes particularly problematic because:

- Rate shopping requests often burst in clusters when customers check shipping options

- Label generation workflows can trigger multiple API calls in rapid succession

- Webhook processing can create sudden spikes in outbound API requests

- Multi-tenant platforms like Cargoson, nShift, EasyPost, and ShipEngine amplify these patterns across hundreds of shippers
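
The read-then-write interleaving described earlier can be reproduced without Redis. The sketch below is a toy model, not production code: a `Map` stands in for the shared store, and each request is a generator so that a hypothetical round-robin scheduler (`runInterleaved`) can pause it between the read and the write, much like the gap between two separate network round trips.

```javascript
// Each request is a generator so a scheduler can pause it between the read
// and the write, the same gap that exists between two separate round trips.
function* naiveCheck(store, key, limit) {
  const used = store.get(key) ?? 0 // step 1: read the counter
  yield                            // another server's request may run here
  if (used >= limit) return false
  store.set(key, used + 1)         // step 2: write based on a possibly stale read
  return true
}

// Round-robin scheduler: advances every pending request by one step per pass.
function runInterleaved(requests) {
  const results = new Array(requests.length)
  let pending = requests.map((gen, index) => ({ gen, index }))
  while (pending.length > 0) {
    pending = pending.filter(({ gen, index }) => {
      const step = gen.next()
      if (step.done) results[index] = step.value
      return !step.done
    })
  }
  return results
}

const store = new Map([['fedex:demo', 99]]) // 99 of 100 requests already used
const outcomes = runInterleaved([
  naiveCheck(store, 'fedex:demo', 100),
  naiveCheck(store, 'fedex:demo', 100),
])
console.log(outcomes, store.get('fedex:demo'))
```

Both requests read 99, both pass the check, and both write 100. Two requests went out with one slot left, and the counter does not even record the overshoot.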

The business impact is real. API downtime increased from 34 minutes in Q1 2024 to 55 minutes in Q1 2025, nearly 20% of webhook event deliveries fail silently during peak loads, and financial services and telecom sectors faced 40,000+ API incidents in H1 2025.

Redis Lua Scripts: Moving Logic into Atomic Operations

The fix is straightforward but requires a shift in approach: move the entire read-calculate-update logic into a single atomic operation. With Redis, this can be achieved with Lua scripting.

Lua scripts allow us to execute multiple Redis commands as a single atomic transaction. This ensures that multiple clients cannot interfere with each other while accessing shared resources.

Here's how to implement atomic rate limiting for carrier APIs:

    const rateLimitScript = `
      local key = KEYS[1]
      local limit = tonumber(ARGV[1])
      local window = tonumber(ARGV[2])

      -- INCR and EXPIRE run inside one script, so no other client can interleave
      local current = redis.call("INCR", key)
      if current == 1 then
        redis.call("EXPIRE", key, window)
      end

      if current > limit then
        return 0 -- Rate limit exceeded
      else
        return 1 -- Request allowed
      end
    `

    // Usage for different carriers
    const fedexAllowed = await redis.eval(rateLimitScript, 1,
      `fedex:rate_limit:${apiKey}`, 100, 60)
    const upsAllowed = await redis.eval(rateLimitScript, 1,
      `ups:rate_limit:${userId}`, 200, 60)

All of this happens as one atomic command. No other Redis client can sneak in during this execution. Problem solved. The entire increment-and-check operation becomes indivisible, eliminating the race condition that plagues distributed token bucket implementations.

For more complex scenarios like token bucket refills, you can implement the entire algorithm in Lua:

    const tokenBucketScript = `
      local key = KEYS[1]
      local capacity = tonumber(ARGV[1])
      local refillRate = tonumber(ARGV[2])       -- tokens per second
      local requestedTokens = tonumber(ARGV[3])
      local now = tonumber(ARGV[4])              -- milliseconds

      local bucket = redis.call("HMGET", key, "tokens", "lastRefill")
      local tokens = tonumber(bucket[1]) or capacity
      local lastRefill = tonumber(bucket[2]) or now

      -- Calculate tokens to add. lastRefill only advances once a whole token
      -- has accrued, so frequent calls cannot starve the refill.
      local timePassed = now - lastRefill
      local tokensToAdd = math.floor(timePassed * refillRate / 1000)
      if tokensToAdd > 0 then
        tokens = math.min(capacity, tokens + tokensToAdd)
        lastRefill = now
      end

      local allowed = 0
      if tokens >= requestedTokens then
        tokens = tokens - requestedTokens
        allowed = 1
      end

      -- HSET with several fields needs Redis 4.0+; it replaces the deprecated HMSET
      redis.call("HSET", key, "tokens", tokens, "lastRefill", lastRefill)
      redis.call("EXPIRE", key, 3600)
      return allowed
    `

This approach handles the full token bucket lifecycle atomically, including refill calculations based on elapsed time.
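
To sanity-check the refill arithmetic outside Redis, a plain JavaScript model helps. The sketch below assumes `refillRate` is in tokens per second and timestamps are in milliseconds; `takeTokens` is a hypothetical name, and it leaves `lastRefill` unchanged until at least one whole token has accrued so that frequent calls cannot keep rounding the refill down to zero.

```javascript
// Pure model of one token bucket decision. `refillRate` is tokens per second;
// `now` and `lastRefill` are millisecond timestamps (assumed units).
function takeTokens(bucket, capacity, refillRate, requested, now) {
  let tokens = bucket ? bucket.tokens : capacity
  let lastRefill = bucket ? bucket.lastRefill : now

  const tokensToAdd = Math.floor(((now - lastRefill) * refillRate) / 1000)
  if (tokensToAdd > 0) {
    tokens = Math.min(capacity, tokens + tokensToAdd)
    lastRefill = now // only advance once at least one whole token has accrued
  }

  const allowed = tokens >= requested
  if (allowed) tokens -= requested
  return { allowed, bucket: { tokens, lastRefill } }
}

// Worked example: capacity 5, refill 2 tokens/second, one token per request.
let state = null
const decisions = []
for (const now of [0, 0, 0, 0, 0, 0, 400, 500]) {
  const result = takeTokens(state, 5, 2, 1, now)
  state = result.bucket
  decisions.push(result.allowed)
}
console.log(decisions)
```

The first five requests drain the bucket, the sixth is rejected, the request at 400 ms is rejected because only 0.8 of a token has accrued, and the request at 500 ms succeeds. If `lastRefill` were moved to 400 on the rejected call, the request at 500 ms would see only 100 ms of elapsed time and be rejected as well.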

Sharding Strategies for Multi-Carrier Environments

Once you've solved the atomicity problem, the next challenge is distribution. We need to shard consistently so that all of a client's requests always hit the same Redis instance. If user "alice" sometimes hits Redis shard 1 and sometimes hits shard 2, her rate limiting state gets split and becomes useless. We need a distribution algorithm like consistent hashing to solve this.

For carrier integrations, your sharding strategy depends on your rate limiting scope:

- Per-User Limits: Hash user IDs to ensure all requests from the same user hit the same shard

- Per-API-Key Limits: Hash API keys for B2B integrations where each client has their own carrier credentials

- Per-Tenant Limits: In platforms like Cargoson, EasyPost, or nShift, hash tenant IDs to isolate multi-tenant usage

- Global Limits: Use a single key for system-wide limits (requires careful hot key management)

Here's a practical sharding implementation:

    import crypto from 'crypto'

    class CarrierRateLimiter {
      // redisCluster: object mapping 'shard_0'..'shard_7' to Redis clients
      constructor(redisCluster) {
        this.redis = redisCluster
        this.shards = 8 // Number of Redis instances
      }

      getShardKey(identifier) {
        // String() guards against numeric IDs, which update() rejects
        const hash = crypto.createHash('sha256')
          .update(String(identifier)).digest('hex')
        // Simple modulo placement; changing this.shards remaps most keys
        const shard = parseInt(hash.slice(0, 8), 16) % this.shards
        return `shard_${shard}`
      }

      async checkLimit(carrier, identifier, limit, window) {
        const shardKey = this.getShardKey(identifier)
        const rateLimitKey = `${carrier}:${identifier}`
        return this.redis[shardKey].eval(
          rateLimitScript, 1, rateLimitKey, limit, window
        )
      }
    }

    // Usage
    const limiter = new CarrierRateLimiter(redisCluster)
    const fedexAllowed = await limiter.checkLimit('fedex', userId, 100, 60)
    const upsAllowed = await limiter.checkLimit('ups', userId, 200, 60)

Mitigation strategies include sharding Redis instances, implementing local in-memory quotas, or moving to high-performance distributed stores. But sharding isn't without challenges. Using consistent hashing to distribute rate limiting data reduces hotspots but introduces its own challenges during scaling events: rehashing during node addition/removal causes state redistribution, which can temporarily destabilize limits enforcement during incidents.
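
The `getShardKey` method above places keys with a plain modulo, which remaps most keys whenever the shard count changes. A consistent hash ring limits that redistribution. The following is a minimal sketch under stated assumptions: `HashRing` and `fnv1a` are illustrative names, FNV-1a is used only to keep the example dependency-free, and 100 virtual nodes per shard is an arbitrary choice.

```javascript
// FNV-1a 32-bit hash, used here only to keep the sketch dependency-free.
function fnv1a(text) {
  let hash = 0x811c9dc5
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i)
    hash = Math.imul(hash, 0x01000193)
  }
  return hash >>> 0
}

class HashRing {
  constructor(nodes, virtualNodes = 100) {
    this.virtualNodes = virtualNodes
    this.points = [] // sorted list of { position, node }
    nodes.forEach(node => this.addNode(node))
  }

  addNode(node) {
    for (let i = 0; i < this.virtualNodes; i++) {
      this.points.push({ position: fnv1a(`${node}#${i}`), node })
    }
    this.points.sort((a, b) => a.position - b.position)
  }

  // Owner is the first point at or after the key's position, wrapping around.
  getNode(key) {
    const position = fnv1a(key)
    let lo = 0
    let hi = this.points.length
    while (lo < hi) {
      const mid = (lo + hi) >>> 1
      if (this.points[mid].position < position) lo = mid + 1
      else hi = mid
    }
    return this.points[lo % this.points.length].node
  }
}

const keys = Array.from({ length: 1000 }, (_, i) => `tenant-${i}`)
const ring = new HashRing(['shard_0', 'shard_1', 'shard_2', 'shard_3'])
const before = keys.map(key => ring.getNode(key))
ring.addNode('shard_4')
const after = keys.map(key => ring.getNode(key))
const moved = keys.filter((_, i) => before[i] !== after[i]).length
console.log(`${moved} of ${keys.length} keys changed shard`)
```

Every key that changes owner moves to the newly added shard; keys never shuffle between existing shards. The counters for moved keys still start from zero on the new shard, which is the temporary enforcement gap mentioned above.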

Carrier-Specific Sharding Considerations

Different carriers have different rate limiting characteristics that affect your sharding strategy:

- FedEx: Per-meter limits with complex tiered pricing—consider sharding by account number rather than user ID

- UPS: Per-access-key limits with burst allowances—shard by the specific UPS access key being used

- DHL: Geographic rate limits—include region in your sharding key

- Regional carriers: Often have very low limits—might need dedicated shards to prevent interference
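
One way to express these differences is to derive the sharding identifier per carrier. The sketch below is illustrative only: `shardIdentifier` and the context fields (`accountNumber`, `accessKey`, `region`, `userId`) are hypothetical names, not part of any carrier SDK.

```javascript
// Builds the identifier to hash for shard placement.
// Context fields are hypothetical and depend on how your platform stores credentials.
function shardIdentifier(carrier, ctx) {
  switch (carrier) {
    case 'fedex':
      return `fedex:${ctx.accountNumber}` // shard by account rather than user
    case 'ups':
      return `ups:${ctx.accessKey}` // shard by the access key in use
    case 'dhl':
      return `dhl:${ctx.region}:${ctx.accountNumber}` // region is part of the key
    default:
      return `${carrier}:${ctx.userId}` // fall back to per-user placement
  }
}

console.log(shardIdentifier('dhl', { region: 'eu', accountNumber: 'A42' }))
```

The returned string can be passed to whatever shard lookup you use, so that all requests sharing a carrier-side quota land on the same Redis instance.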

Beyond Token Buckets: Advanced Coordination Patterns

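Fixed-window counters, like the INCR-based limiter shown earlier, have a boundary problem. Fire 100 requests at 11:59:59, then 100 more at 12:00:01, and you've "technically" stayed within a 100-per-minute limit in each window but hammered the system with 200 requests in 2 seconds. A token bucket caps such bursts at its capacity, but it does not bound the count over a trailing interval either. A sliding window fixes this by counting requests over the trailing window instead of per calendar minute.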
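
The boundary effect is easy to reproduce with a small in-memory model. The sketch below compares a counter that resets at fixed window boundaries with a log of accepted timestamps; `makeFixedWindow` and `makeSlidingLog` are illustrative helpers, and timestamps are plain millisecond numbers rather than real clock readings.

```javascript
// Fixed window: one counter per calendar window.
function makeFixedWindow(limit, windowMs) {
  const counts = new Map()
  return now => {
    const bucket = Math.floor(now / windowMs)
    const used = counts.get(bucket) ?? 0
    if (used >= limit) return false
    counts.set(bucket, used + 1)
    return true
  }
}

// Sliding window log: remembers accepted timestamps within the trailing window.
function makeSlidingLog(limit, windowMs) {
  let accepted = []
  return now => {
    accepted = accepted.filter(t => t > now - windowMs)
    if (accepted.length >= limit) return false
    accepted.push(now)
    return true
  }
}

// 100 requests one second before a minute boundary, 100 more one second after it.
const boundary = 60000
const burst = [
  ...Array(100).fill(boundary - 1000),
  ...Array(100).fill(boundary + 1000),
]
const countAllowed = limiter => burst.filter(now => limiter(now)).length

const fixedAllowed = countAllowed(makeFixedWindow(100, 60000))
const slidingAllowed = countAllowed(makeSlidingLog(100, 60000))
console.log({ fixedAllowed, slidingAllowed })
```

The fixed-window limiter admits all 200 requests because they fall into two different windows, while the sliding log admits only the first 100.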

For carrier integrations that need stricter flow control, sliding window algorithms provide better distribution:

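    const slidingWindowScript = `
      local key = KEYS[1]
      local limit = tonumber(ARGV[1])
      local window = tonumber(ARGV[2])   -- seconds
      local now = tonumber(ARGV[3])      -- milliseconds
      local requestId = ARGV[4]          -- unique per request, supplied by the caller

      -- Remove old entries beyond the window
      redis.call("ZREMRANGEBYSCORE", key, 0, now - window * 1000)

      -- Count current entries
      local current = redis.call("ZCARD", key)

      if current < limit then
        -- Add current request. A caller-supplied ID keeps members unique;
        -- math.random() may repeat across script runs on some Redis versions,
        -- which would make same-millisecond requests overwrite each other.
        redis.call("ZADD", key, now, now .. ":" .. requestId)
        redis.call("EXPIRE", key, window + 1)
        return 1
      else
        return 0
      end
    `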

This approach uses Redis sorted sets to maintain a precise sliding window, though it requires more memory per key. For high-volume carrier integrations, you might prefer a sliding window counter approximation that uses less memory:

    const slidingCounterScript = `
      local key = KEYS[1]
      local limit = tonumber(ARGV[1])
      local window = tonumber(ARGV[2])   -- seconds
      local now = tonumber(ARGV[3])      -- milliseconds

      local currentWindow = math.floor(now / (window * 1000))
      local previousWindow = currentWindow - 1

      -- These keys are derived inside the script rather than passed in KEYS,
      -- which is fine on a standalone shard but not safe on Redis Cluster.
      local currentKey = key .. ":" .. currentWindow
      local previousKey = key .. ":" .. previousWindow

      local currentCount = tonumber(redis.call("GET", currentKey)) or 0
      local previousCount = tonumber(redis.call("GET", previousKey)) or 0

      -- Weight the previous window based on overlap
      local overlapWeight = (now % (window * 1000)) / (window * 1000)
      local estimatedCount = math.floor(previousCount * (1 - overlapWeight) + currentCount)

      if estimatedCount < limit then
        redis.call("INCR", currentKey)
        redis.call("EXPIRE", currentKey, window * 2)
        return 1
      else
        return 0
      end
    `
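
The weighting step is easier to follow with numbers. This sketch reproduces the estimate in plain JavaScript, assuming the same units as the script (`window` in seconds, `now` in milliseconds); `estimateCount` is an illustrative name.

```javascript
// Estimate of requests in the trailing window, from two fixed-window counters.
// `window` is in seconds and `now` in milliseconds, matching the script's arguments.
function estimateCount(previousCount, currentCount, now, window) {
  const windowMs = window * 1000
  const elapsedFraction = (now % windowMs) / windowMs
  return Math.floor(previousCount * (1 - elapsedFraction) + currentCount)
}

// 15 seconds into a 60-second window: 75% of the previous window still overlaps.
const estimate = estimateCount(80, 30, 75000, 60)
console.log(estimate) // 80 * 0.75 + 30 = 90
```

With a limit of 100, this request would be allowed because the estimate of 90 is below the limit. The approximation assumes the previous window's requests were spread evenly, which is the price of storing two counters instead of one entry per request.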

Adaptive Rate Limiting: Learning from Carrier Behaviour

Static rate limits often don't reflect real carrier capacity. Adaptive rate limiting lets you create dynamic rules based on factors like user behavior, geographic location, or historical usage. Monitoring user activity, such as request patterns and error rates, enables you to adjust limits in real time.

Modern carrier integration platforms can achieve significant performance improvements through adaptive coordination. Dynamic rate limiting looks promising here, with the potential to cut server load by up to 40% during peak times while maintaining availability.

Here's how to implement adaptive rate limiting that learns from carrier response patterns:

    class AdaptiveCarrierLimiter {
      // baseLimits: e.g. { fedex: 100, ups: 200 } (requests per window)
      constructor(redis, baseLimits) {
        this.redis = redis
        this.baseLimits = baseLimits
      }

      async adjustLimits(carrier, responses) {
        const key = `${carrier}:adaptive_metrics`
        const pipeline = this.redis.pipeline()

        // Count outcomes in one hash so they can be read back with HMGET.
        // Counters are cumulative; reset or expire them to weight recent behavior.
        responses.forEach(response => {
          if (response.status === 429) {
            pipeline.hincrby(key, 'throttled', 1)
          } else if (response.time > 2000) {
            pipeline.hincrby(key, 'slow', 1)
          } else {
            pipeline.hincrby(key, 'success', 1)
          }
        })
        await pipeline.exec()

        // Adjust rate limit based on carrier health
        const [throttled, , success] = (await this.redis.hmget(key,
          'throttled', 'slow', 'success')).map(value => Number(value) || 0)
        const throttleRate = throttled / (success || 1)

        const newLimit = throttleRate > 0.1 ?
          Math.floor(this.baseLimits[carrier] * 0.8) :
          Math.floor(this.baseLimits[carrier] * 1.1)

        await this.redis.set(`${carrier}:current_limit`, newLimit, 'EX', 300)
      }
    }

Different carriers exhibit distinct behavior patterns that adaptive algorithms can learn from:

- FedEx: Often has stricter enforcement during business hours, looser limits at night

- UPS: Provides clear rate limit headers—use these to dynamically adjust

- DHL: Geographic variations in API performance—adapt based on origin/destination

- Regional carriers: Often have inconsistent API performance—more aggressive backoff needed

Platforms like Cargoson, Transporeon, and nShift can leverage these patterns across their entire shipper base, creating a form of collective intelligence about carrier API behavior.
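
If you want the adjusted limit to stay within known bounds, one option is an additive-increase, multiplicative-decrease rule with a floor and ceiling. This is a different technique from the fixed 0.8x/1.1x multipliers in the class above, sketched here as a pure function. The name `nextLimit`, the step of 5, and the assumption that `ceiling` is the carrier's documented limit are all illustrative.

```javascript
// Additive increase, multiplicative decrease, clamped to [floor, ceiling].
// `ceiling` would normally be the carrier's documented limit (assumption).
function nextLimit(current, stats, options) {
  const { floor, ceiling, step = 5, backoff = 0.8, maxThrottleRate = 0.1 } = options
  const total = stats.throttled + stats.slow + stats.success
  if (total === 0) return current // no traffic observed, keep the current limit

  const throttleRate = stats.throttled / total
  const proposed = throttleRate > maxThrottleRate
    ? Math.floor(current * backoff) // back off quickly when the carrier pushes back
    : current + step                // recover slowly while responses are healthy
  return Math.min(ceiling, Math.max(floor, proposed))
}

const bounds = { floor: 20, ceiling: 100 }
const afterThrottling = nextLimit(100, { throttled: 20, slow: 0, success: 80 }, bounds)
const afterRecovery = nextLimit(afterThrottling, { throttled: 0, slow: 5, success: 95 }, bounds)
const atCeiling = nextLimit(98, { throttled: 0, slow: 0, success: 10 }, bounds)
console.log(afterThrottling, afterRecovery, atCeiling) // 80 85 100
```

Backing off multiplicatively and recovering additively keeps the limiter from oscillating around the carrier's threshold, and the ceiling ensures the adaptive limit never exceeds what the carrier allows.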

Production Implementation: Choosing Your Coordination Stack

When implementing coordination patterns for carrier rate limiting at scale, your technology choices matter significantly. A typical Redis instance can handle around 100,000-200,000 operations per second depending on the operation complexity. Each one of our rate limit checks requires multiple Redis operations, at minimum an HMGET to fetch state and an HSET to update it. So our single Redis instance can realistically handle maybe 50,000-100,000 rate limit checks per second before becoming the bottleneck.
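
The capacity estimate above is simple division, and the same arithmetic gives a rough shard count for a target load. In this sketch the function names and the `headroom` factor (the fraction of raw capacity you are willing to use, assumed to be 0.5) are illustrative, not measured values.

```javascript
// Rate limit checks one instance can serve, given Redis operations per check.
function checksPerSecond(opsPerSecond, opsPerCheck) {
  return Math.floor(opsPerSecond / opsPerCheck)
}

// Instances needed for a target check rate. `headroom` (assumed 0.5) is the
// fraction of raw capacity you are willing to use.
function shardsNeeded(targetChecksPerSecond, opsPerSecond, opsPerCheck, headroom = 0.5) {
  const usable = checksPerSecond(opsPerSecond, opsPerCheck) * headroom
  return Math.ceil(targetChecksPerSecond / usable)
}

console.log(checksPerSecond(100000, 2)) // 50000
console.log(checksPerSecond(200000, 2)) // 100000
console.log(shardsNeeded(300000, 100000, 2)) // 12
```

Treat the result as a starting point for load testing rather than a sizing guarantee, since real throughput depends on script complexity, payload size, and network latency.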

For large-scale carrier integration platforms, consider these coordination tools:

- Redis with Lua scripts: Redis is perfect for straightforward distributed counting. Best for most carrier integration scenarios

- Bucket4j: Java-based with strong distributed support, good for JVM-based integration platforms

- Gubernator: Gubernator is tailor-made for microservices. Unlike tools that rely on centralized datastores for every request, Gubernator distributes rate-limiting logic across the service mesh. This reduces dependency on a single point of failure and minimizes network overhead. This distributed intelligence model is ideal for addressing scalability challenges in microservices.

Monitor your coordination layer carefully. Key metrics include:

- Redis operation latency (should stay under 1ms p95)

- Lua script execution time

- Hot key detection (watch for carriers that generate high request volumes)

- Coordination failure rates

Set up fallback mechanisms for coordination failures:

    // checkDistributedLimit and localRateLimiter are assumed to be defined elsewhere
    async function rateLimitWithFallback(carrier, identifier, limit) {
      try {
        return await checkDistributedLimit(carrier, identifier, limit)
      } catch (redisError) {
        // Fallback to local in-memory limiting with a whole-number limit
        return localRateLimiter.check(carrier, identifier, Math.floor(limit * 0.5))
      }
    }

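Notice the fallback uses a reduced limit (50% of the distributed limit). This keeps the system from becoming too permissive when coordination fails while maintaining availability. Because each server enforces the fallback independently, the combined rate can still exceed the carrier limit when more than two servers are in fallback at once; dividing the limit by the number of servers is the stricter option.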
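
The fallback relies on a `localRateLimiter` that is not shown. A minimal in-memory version could look like the sketch below, assuming a fixed 60-second window and an injectable clock for testing; the class name and constructor parameters are hypothetical.

```javascript
// In-memory fixed-window limiter for use when Redis is unreachable.
// State is per process, so it only limits the traffic of this one server.
class LocalRateLimiter {
  constructor(windowMs = 60000, clock = () => Date.now()) {
    this.windowMs = windowMs
    this.clock = clock
    this.counters = new Map() // key -> { windowStart, count }
  }

  check(carrier, identifier, limit) {
    const key = `${carrier}:${identifier}`
    const now = this.clock()
    let entry = this.counters.get(key)
    if (!entry || now - entry.windowStart >= this.windowMs) {
      entry = { windowStart: now, count: 0 } // start a fresh window
      this.counters.set(key, entry)
    }
    if (entry.count >= Math.floor(limit)) return false
    entry.count += 1
    return true
  }
}

// Usage with a fake clock: a limit of 5 * 0.5 = 2.5 is floored to 2.
let fakeNow = 0
const localRateLimiter = new LocalRateLimiter(60000, () => fakeNow)
const firstWindow = [1, 2, 3].map(() => localRateLimiter.check('fedex', 'acct-1', 5 * 0.5))
fakeNow = 60000
const nextWindow = localRateLimiter.check('fedex', 'acct-1', 5 * 0.5)
console.log(firstWindow, nextWindow)
```

The map grows with the number of distinct keys, so a long-running process would also want to evict stale entries periodically.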

Error Budget Allocation and SLA Considerations

In production carrier integration systems, rate limiting coordination becomes part of your overall reliability budget. The cost of coordination failures extends beyond immediate technical impact: 47% report $100,000+ remediation costs, and 20% exceed $500,000.

Allocate error budget carefully across your coordination stack:

- 30% for carrier API availability (external dependency)

- 20% for Redis coordination layer availability

- 25% for application server availability

- 25% for network and infrastructure
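
To turn these percentages into minutes, pick an availability target and split the resulting budget. The sketch below assumes a 99.9% SLO over a 30-day month purely for illustration; the function names and share keys are hypothetical.

```javascript
// Total allowed downtime in minutes for a given SLO (assumed 30-day month).
function errorBudgetMinutes(slo, days = 30) {
  return (1 - slo) * days * 24 * 60
}

// Splits the budget by share, rounded to two decimals for readability.
function allocate(totalMinutes, shares) {
  const round2 = value => Math.round(value * 100) / 100
  return Object.fromEntries(
    Object.entries(shares).map(([name, share]) => [name, round2(totalMinutes * share)])
  )
}

const budget = errorBudgetMinutes(0.999) // roughly 43.2 minutes per month
const allocation = allocate(budget, {
  carrierApi: 0.30,
  redisCoordination: 0.20,
  applicationServers: 0.25,
  network: 0.25,
})
console.log(allocation)
```

Under that assumption the Redis coordination layer gets under nine minutes of unavailability per month, which is a useful number to hold failover and fallback behavior against.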

When coordination patterns prevent race conditions effectively, you can maintain higher overall system reliability. The small latency cost of atomic operations (typically 1-2ms) is justified by the elimination of rate limit violations that can result in API suspensions.

Consider implementing circuit breakers around your coordination layer to prevent cascading failures when Redis becomes unavailable. Modern integration platforms balance availability with consistency, often choosing to degrade gracefully rather than fail completely when coordination systems experience issues.
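
A circuit breaker for the coordination layer can be quite small. The following sketch tracks consecutive failures and stops calling Redis for a cooldown period once a threshold is reached; the class, its option names, and the default values are assumptions for illustration rather than a reference implementation.

```javascript
class CircuitBreaker {
  constructor({ failureThreshold = 5, resetTimeoutMs = 10000, clock = () => Date.now() } = {}) {
    this.failureThreshold = failureThreshold
    this.resetTimeoutMs = resetTimeoutMs
    this.clock = clock
    this.failures = 0
    this.openedAt = null // null means the circuit is closed
  }

  // True when the protected call (for example the Redis check) may be attempted.
  canRequest() {
    if (this.openedAt === null) return true
    // After the cooldown, allow a probe request (half-open state).
    return this.clock() - this.openedAt >= this.resetTimeoutMs
  }

  recordSuccess() {
    this.failures = 0
    this.openedAt = null
  }

  recordFailure() {
    this.failures += 1
    if (this.failures >= this.failureThreshold) this.openedAt = this.clock()
  }
}

// Usage with a fake clock
let fakeNow = 0
const breaker = new CircuitBreaker({ failureThreshold: 3, clock: () => fakeNow })
breaker.recordFailure()
breaker.recordFailure()
breaker.recordFailure()
const whileOpen = breaker.canRequest()   // false: skip Redis, use the local fallback
fakeNow = 10000
const probeAllowed = breaker.canRequest() // true: cooldown elapsed, try Redis once
breaker.recordSuccess()
const afterSuccess = breaker.canRequest() // true: circuit closed again
console.log(whileOpen, probeAllowed, afterSuccess)
```

When `canRequest()` returns false, the caller would go straight to the local fallback limiter instead of waiting for another Redis timeout.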

The patterns explored here—from Lua scripting for atomicity to adaptive algorithms that learn carrier behavior—provide a foundation for building resilient, scalable rate limiting in distributed carrier integration systems. Getting coordination right eliminates race conditions while maintaining the performance characteristics needed for high-throughput shipping operations.