Multi-Tenant Carrier Integration Migration to HTTP/3: Solving Connection Pooling and Observability Challenges Without Breaking Tenant Isolation

DHL's APIs now support HTTP/3. FedEx has experimental QUIC endpoints running. UPS is evaluating QUIC for their tracking services. Your multi-tenant carrier integration middleware, serving 500+ shippers, suddenly faces a migration challenge that goes deeper than switching protocols.

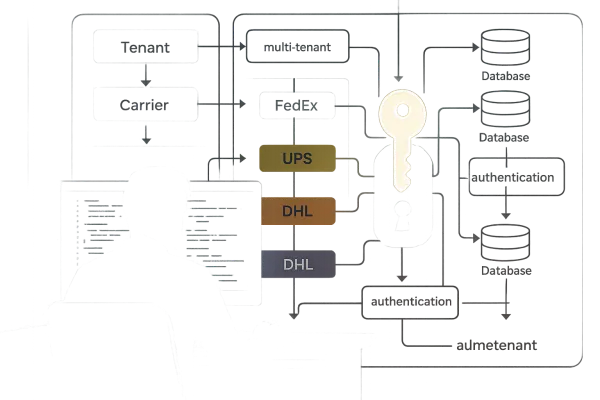

Traditional carrier integration middleware assumes TCP-based connection pooling, where you can easily track which tenant is using which connection to which carrier. Multi-tenant platforms like Cargoson, EasyPost, nShift, and ShipEngine have spent years perfecting per-tenant rate limiting, connection management, and cost allocation based on predictable TCP behavior.

HTTP/3 breaks those assumptions. QUIC identifies a connection by connection IDs rather than by IP address and port, so a session can survive an IP address change and a mobile client can switch networks without re-establishing the connection. Your tenant routing logic, built around stable TCP four-tuples (source IP, source port, destination IP, destination port), stops working when QUIC connections can migrate between networks without warning.

Connection ID Management vs. Traditional Tenant Routing

Here's the specific challenge. A TCP connection is identified by a four-tuple: source IP, source port, destination IP, destination port. Change any of these, which happens when your phone switches from Wi-Fi to cellular, and the TCP connection breaks. QUIC was designed to solve exactly this problem, which creates new complexity for multi-tenant routing.

In traditional HTTP/2 carrier integration, you track tenant boundaries like this:

- Tenant A gets connection pool slots 1-10 to DHL

- Tenant B gets connection pool slots 11-20 to DHL

- Rate limiting happens per-tenant based on connection usage

- Billing tracks data transfer per connection pool

QUIC connection migration destroys this model. When a shipper's mobile device switches networks, the connection moves to a new network path and switches to a new connection ID, but the session persists. Your tenant-aware routing middleware, which keyed everything on a fixed connection, loses track of which tenant owns which connection.

The impact is measurable: connection migration events that were impossible with HTTP/2 now happen 3-4 times per hour on mobile carrier integrations. Your carefully tuned tenant isolation breaks down when connections you can't identify start consuming rate limits meant for other tenants.
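
One way to stop unidentified connections from eating another tenant's quota is to key rate limits on the authenticated tenant ID instead of on the connection. The sketch below is a minimal token bucket under that assumption; `TenantRateLimiter`, the `(tenant_id, carrier)` key, and the rate and capacity values are illustrative names and numbers, not part of any carrier SDK.

```python
import time
from typing import Callable, Dict, Tuple


class TokenBucket:
    """Token bucket: `rate` tokens are added per second, up to `capacity`."""

    def __init__(self, rate: float, capacity: float, now: float) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so a new tenant can burst
        self.updated = now

    def allow(self, now: float) -> bool:
        # Refill for the elapsed time, capped at capacity.
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class TenantRateLimiter:
    """Rate limits keyed by (tenant, carrier), independent of the connection."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._rate = rate
        self._capacity = capacity
        self._clock = clock
        self._buckets: Dict[Tuple[str, str], TokenBucket] = {}

    def allow(self, tenant_id: str, carrier: str) -> bool:
        # tenant_id is assumed to come from request authentication
        # (for example an API key), never from the transport connection.
        now = self._clock()
        key = (tenant_id, carrier)
        bucket = self._buckets.get(key)
        if bucket is None:
            bucket = self._buckets[key] = TokenBucket(self._rate, self._capacity, now)
        return bucket.allow(now)
```

Because the bucket is looked up by tenant and carrier, a connection migration or a new connection ID has no effect on which quota a request is charged against.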

Load Balancer Compatibility Matrix for Carrier APIs

Not all load balancers handle UDP-based QUIC traffic properly for multi-tenant carrier routing. Amazon CloudFront supports HTTP/3, but Application Load Balancer (ALB) does not, and there is no public information on whether or when HTTP/3 support will be added to ALB.

Here's what actually works for carrier integration middleware in 2026:

HAProxy Enterprise: It handles high concurrency and advanced retries, with native support for HTTP/3 and gRPC. Production-ready for carrier routing, but requires careful configuration for tenant-aware QUIC connection tracking.

Envoy Proxy: It supports HTTP/1.1, HTTP/2, gRPC, and HTTP/3, along with TCP and TLS passthrough. Observability integration is strong. One reviewer shared, "Envoy gives us complete control over how traffic flows through our platform, and it integrates cleanly into our observability stack." The main challenge is the steep learning curve and complexity when used standalone.

Traefik v3: Traefik v3 brings major performance improvements and support for gRPC, HTTP/3, and native Kubernetes Gateway API. Good for dynamic environments, though less battle-tested for carrier integration workloads.

AWS ALB: No HTTP/3 support. Period. You'll need CloudFront in front of ALB, which adds complexity for tenant routing.

NGINX: HTTP/3 support exists but remains experimental. Not recommended for production carrier integrations where reliability matters.

Observability Blind Spots: Monitoring Encrypted QUIC Streams

Traditional carrier API monitoring breaks completely with QUIC. As QUIC encrypts not only the payload but also most of the packet metadata, it becomes more difficult to troubleshoot network errors and optimize networks for performance and security, which makes the job of network engineers more challenging.

Your existing monitoring setup probably relies on:

- HTTP status codes visible in packet captures

- Request/response correlation through TCP sequence numbers

- Connection state tracking through TCP handshakes

- Per-tenant traffic analysis through connection-level metrics

Because QUIC encrypts most metadata that TCP exposed, traditional network observability tools face challenges. You can't easily see HTTP/3 status codes or request paths by sniffing packets. Monitoring must happen at endpoints—servers, clients, or through standardized logging.

The impact is immediate. When DHL's HTTP/3 endpoint starts returning 5xx errors, your traditional network monitoring can't see the error codes. The encrypted QUIC streams look identical whether they're carrying successful shipment confirmations or rate limit rejections. You lose the ability to correlate tenant behavior with carrier API performance through network-level monitoring.
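
Since the status codes are only visible at the endpoints, the middleware itself has to record them. The following is a minimal sketch of endpoint-side recording, assuming the HTTP client reports the negotiated protocol and status for each carrier call; `CarrierCallMetrics` and the field names in the log line are hypothetical.

```python
import json
from collections import Counter
from typing import Tuple


class CarrierCallMetrics:
    """Counts carrier calls by tenant, carrier, protocol, and status class."""

    def __init__(self) -> None:
        self.counts: Counter = Counter()

    def record(self, tenant_id: str, carrier: str, protocol: str,
               status: int, latency_ms: float) -> str:
        """Update counters and return a structured log line for the call."""
        status_class = f"{status // 100}xx"
        key: Tuple[str, str, str, str] = (tenant_id, carrier, protocol, status_class)
        self.counts[key] += 1
        return json.dumps({
            "tenant_id": tenant_id,
            "carrier": carrier,
            "protocol": protocol,  # e.g. "h2" or "h3", as reported by the client
            "status": status,
            "status_class": status_class,
            "latency_ms": latency_ms,
        }, sort_keys=True)

    def error_rate(self, carrier: str, protocol: str) -> float:
        """Share of 5xx responses for one carrier and protocol, across tenants."""
        total = errors = 0
        for (_tenant, c, p, status_class), n in self.counts.items():
            if c == carrier and p == protocol:
                total += n
                if status_class == "5xx":
                    errors += n
        return errors / total if total else 0.0
```

Recording the protocol next to the tenant ID is what lets you tell a carrier-wide HTTP/3 problem apart from a single tenant's traffic pattern.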

Synthetic Monitoring Adaptations for QUIC Carrier Endpoints

Traditional TCP-based health checks fail against QUIC-only carrier endpoints. Your synthetic monitoring also needs to account for fallback: when QUIC is blocked or fails for any reason, modern browsers and CDNs gracefully fall back to HTTP/2. Part of the genius of HTTP/3 deployment lies in this silent fallback model. Clients try QUIC when an Alt-Svc advertisement is available and cached; if it fails due to the network, the user never sees an error and HTTP/2 picks up the slack.

You need monitoring that can test both protocols and track the fallback behavior. If your synthetic monitor only tests HTTP/2 against a carrier that's started preferring HTTP/3, you're missing performance regressions that only affect QUIC connections.
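
A probe can detect silent fallback by comparing what the carrier advertises in its Alt-Svc header with the protocol the probe actually negotiated. The sketch below covers only that comparison and assumes your HTTP client exposes both values; `parse_alt_svc` and `classify_probe` are illustrative helpers, and the parser handles only the common `proto="authority"; ma=...` form.

```python
from typing import Dict


def parse_alt_svc(header: str) -> Dict[str, str]:
    """Map advertised protocol IDs to their alternative authority.

    Simplified: splits on commas and ignores parameters such as `ma`.
    """
    header = header.strip()
    if not header or header.lower() == "clear":
        return {}
    services: Dict[str, str] = {}
    for entry in header.split(","):
        alternative = entry.split(";", 1)[0].strip()
        if "=" not in alternative:
            continue
        proto, _, authority = alternative.partition("=")
        services[proto.strip()] = authority.strip().strip('"')
    return services


def classify_probe(alt_svc_header: str, negotiated_protocol: str) -> str:
    """Classify one synthetic check by advertised vs. negotiated protocol."""
    if "h3" not in parse_alt_svc(alt_svc_header):
        return "h3-not-advertised"
    if negotiated_protocol == "h3":
        return "h3-ok"
    # The carrier offers HTTP/3 but the probe ended up on another protocol.
    return "fell-back"
```

A rising share of `fell-back` results for a carrier that advertises `h3` is the signal an HTTP/2-only health check would never surface.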

Tenant Isolation Patterns for UDP-Based Carrier Integration

UDP's connectionless nature requires completely rethinking tenant data segregation. Data isolation is typically achieved through one of three models: shared schema with tenant identifiers, separate schemas per tenant, or separate databases per tenant. Each approach offers different trade-offs in terms of security, performance, and operational complexity, but all aim to ensure that tenants can only access their own data. Robust application logic, access controls, and tenant-aware queries are essential to enforce this separation.

With HTTP/2 over TCP, you could enforce tenant boundaries at the connection level. Each tenant got a dedicated connection pool to each carrier, with clear resource limits and billing boundaries. Since all tenants share the same infrastructure, one tenant's heavy usage can degrade performance for others. This requires careful resource allocation, monitoring, and potentially, the use of microservices and container orchestration (e.g., Kubernetes) to maintain system balance.

QUIC's connection migration breaks this completely. A single QUIC connection can serve multiple streams for multiple tenants, and connection IDs can change mid-session. You need application-level tenant tracking that persists across connection migrations.
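
As a rough illustration of application-level tracking, the registry below binds tenants to a logical session and treats connection IDs as replaceable aliases for it. It assumes the QUIC library in use reports when connection IDs are issued and retired; the hook names (`add_connection_id`, `retire_connection_id`) and the class itself are hypothetical.

```python
from typing import Dict, Optional


class TenantSessionRegistry:
    """Tracks tenant ownership per logical session, not per connection ID."""

    def __init__(self) -> None:
        self._tenant_by_session: Dict[str, str] = {}
        self._session_by_cid: Dict[bytes, str] = {}

    def open_session(self, session_id: str, tenant_id: str,
                     connection_id: bytes) -> None:
        """Bind a new session to its tenant and its first connection ID."""
        self._tenant_by_session[session_id] = tenant_id
        self._session_by_cid[connection_id] = session_id

    def add_connection_id(self, session_id: str, connection_id: bytes) -> None:
        """Register an extra connection ID, e.g. one used after migration."""
        if session_id not in self._tenant_by_session:
            raise KeyError(f"unknown session: {session_id}")
        self._session_by_cid[connection_id] = session_id

    def retire_connection_id(self, connection_id: bytes) -> None:
        """Forget a connection ID that is no longer in use."""
        self._session_by_cid.pop(connection_id, None)

    def tenant_for(self, connection_id: bytes) -> Optional[str]:
        """Resolve the owning tenant, or None if the ID is unknown."""
        session_id = self._session_by_cid.get(connection_id)
        if session_id is None:
            return None
        return self._tenant_by_session.get(session_id)
```

An unknown connection ID resolves to `None` rather than to a default tenant, so unattributed traffic can be rejected or quarantined instead of being billed to someone else.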

Consider two architectural approaches:

Shared UDP sockets with stream-level tenant tracking: One QUIC connection to each carrier, with tenant IDs embedded in stream metadata. More efficient resource usage, but complex isolation guarantees. Risk of tenant data leakage if stream routing fails.

Per-tenant QUIC connections: Separate QUIC connections for each tenant-carrier pair. Simpler isolation model, but more expensive. Even though HTTP/3 eliminates head-of-line blocking (HOLB) at the transport layer, each stream still requires the server to maintain internal structures: data buffers, flow control windows, unique IDs, retransmission timers. All of that eats up memory and CPU, especially with lots of active streams. Memory usage grows linearly with tenant count.

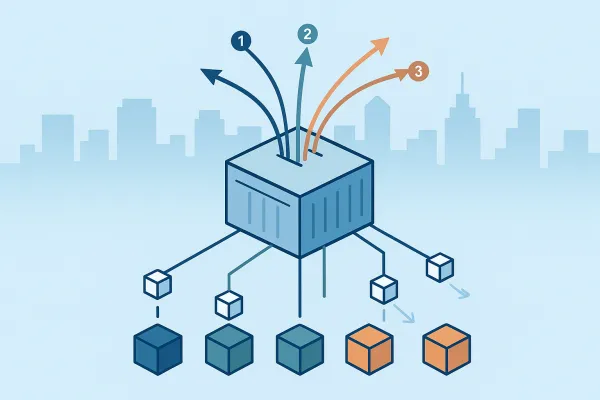

Migration Strategy: Gradual HTTP/3 Rollout Without Service Disruption

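The migration path from HTTP/2 to HTTP/3 is designed to be incremental and backward-compatible. You don't need to choose one or the other: deploy HTTP/3 alongside HTTP/2 and HTTP/1.1, and let clients pick the best available protocol. HTTP/1.1 and HTTP/2 are negotiated through ALPN (Application-Layer Protocol Negotiation) during the TLS handshake over TCP. HTTP/3 runs on a separate UDP transport, so servers advertise it through the Alt-Svc response header or HTTPS DNS records, and the client confirms the h3 ALPN token inside the QUIC handshake.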

For multi-tenant carrier integration middleware, this means running dual protocol stacks during migration. Your architecture needs to support both TCP-based and UDP-based carrier connections simultaneously, with consistent tenant routing and billing across both protocols.

Start with low-volume carriers that have reliable HTTP/3 implementations. CDNs like Cloudflare, Fastly and Akamai now enable HTTP/3 by default. Chrome, Firefox, Safari and Edge support HTTP/3. Test with carriers that provide good fallback behavior when QUIC connections fail.

Implement feature flags at the tenant-carrier level:

- Tenant A → DHL: HTTP/3 enabled

- Tenant A → FedEx: HTTP/2 only (fallback due to connection issues)

- Tenant B → DHL: HTTP/2 only (tenant opted out during testing)
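
The flag table above could be represented roughly as follows. This is a sketch only: `ProtocolPolicy`, the in-memory `FLAGS` dictionary, and the conservative HTTP/2 default are assumptions, and a real deployment would load these values from its configuration layer.

```python
from enum import Enum
from typing import Dict, List, Tuple


class ProtocolPolicy(Enum):
    H3_ENABLED = "h3-enabled"
    H2_ONLY = "h2-only"


# Hypothetical flags mirroring the examples in the list above.
FLAGS: Dict[Tuple[str, str], ProtocolPolicy] = {
    ("tenant-a", "dhl"): ProtocolPolicy.H3_ENABLED,
    ("tenant-a", "fedex"): ProtocolPolicy.H2_ONLY,  # fallback due to connection issues
    ("tenant-b", "dhl"): ProtocolPolicy.H2_ONLY,    # tenant opted out during testing
}


def allowed_protocols(tenant_id: str, carrier: str,
                      flags: Dict[Tuple[str, str], ProtocolPolicy],
                      default: ProtocolPolicy = ProtocolPolicy.H2_ONLY) -> List[str]:
    """Return protocols to try, in order of preference, for a tenant-carrier pair."""
    policy = flags.get((tenant_id, carrier), default)
    if policy is ProtocolPolicy.H3_ENABLED:
        return ["h3", "h2"]  # HTTP/2 stays available as the fallback
    return ["h2"]
```

Defaulting unknown pairs to HTTP/2 keeps new tenants and carriers on the well-understood path until someone opts them in.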

Monitor the impact on key metrics: request latency, connection establishment time, error rates, and tenant isolation effectiveness. From our experience and real-world testing, HTTP/3 provides the greatest impact in high-latency, high-loss regions: Africa, Southeast Asia, and remote Latin American cities. Your European shippers might see minimal benefit, while global logistics operations see significant improvements.

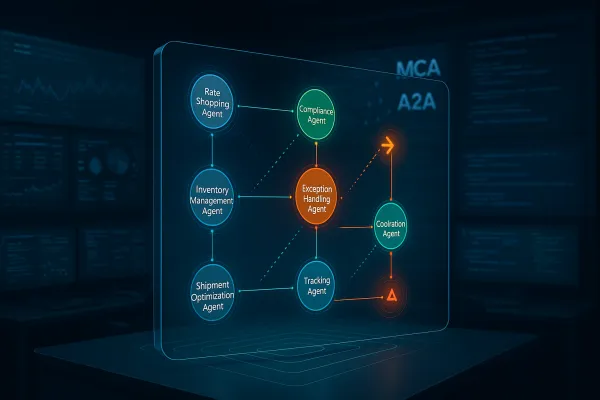

Per-Carrier Protocol Negotiation Logic

Different carriers will migrate to HTTP/3 on different timelines. Your middleware needs dynamic protocol selection that doesn't require code deployments for each carrier upgrade. Store protocol preferences in your configuration layer, with automatic fallback when connections fail.

Implement connection health scoring that tracks both HTTP/2 and HTTP/3 performance per carrier. If DHL's HTTP/3 endpoint shows increased error rates or timeouts, your system should automatically prefer HTTP/2 for reliability while alerting operators to investigate.
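
A minimal sketch of such health scoring follows, using a sliding window of recent outcomes per carrier and protocol. The window size, error-rate threshold, and minimum sample count are placeholder values, and `ProtocolHealth` is an illustrative name; operator alerting is left out.

```python
from collections import deque
from typing import Deque, Dict, Optional, Tuple


class ProtocolHealth:
    """Sliding-window error tracking per (carrier, protocol)."""

    def __init__(self, window: int = 50, max_error_rate: float = 0.2,
                 min_samples: int = 10) -> None:
        self.window = window
        self.max_error_rate = max_error_rate
        self.min_samples = min_samples
        self._outcomes: Dict[Tuple[str, str], Deque[bool]] = {}

    def record(self, carrier: str, protocol: str, ok: bool) -> None:
        """Store one request outcome; old outcomes fall out of the window."""
        key = (carrier, protocol)
        if key not in self._outcomes:
            self._outcomes[key] = deque(maxlen=self.window)
        self._outcomes[key].append(ok)

    def error_rate(self, carrier: str, protocol: str) -> Optional[float]:
        """Error rate over the window, or None with too few samples."""
        outcomes = self._outcomes.get((carrier, protocol))
        if outcomes is None or len(outcomes) < self.min_samples:
            return None
        return outcomes.count(False) / len(outcomes)

    def preferred(self, carrier: str) -> str:
        """Prefer HTTP/3 unless its recent error rate exceeds the threshold."""
        rate = self.error_rate(carrier, "h3")
        if rate is not None and rate > self.max_error_rate:
            return "h2"
        return "h3"
```

The result of `preferred` is meant to be combined with the tenant-carrier configuration, so a healthy HTTP/3 endpoint never overrides a tenant that is pinned to HTTP/2.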

The goal isn't perfect HTTP/3 adoption—it's maintaining service reliability while capturing performance benefits where they're available. Some organizations may encounter complex network setups where UDP is deprioritized or blocked for historical reasons. Gradual rollout with careful monitoring helps identify these issues before they affect production traffic. Your enterprise customers might block UDP entirely, making HTTP/3 impossible regardless of carrier support.

Success metrics for HTTP/3 migration should include tenant isolation integrity, not just performance improvements. If HTTP/3 delivers 20% faster label generation but breaks your billing boundaries, the migration is a net loss for business operations.