Production-Ready API Monitoring for Carrier Integration: Detecting Version Changes and Outages Before They Break Shipments

Your carrier API monitoring setup decides whether you catch integration failures before your customers do. Over the past four-plus years, we have collected data on more than 332 outages that affected ShipEngine API users. During an outage you can't calculate shipping rates, customers lose patience, and you lose sales.

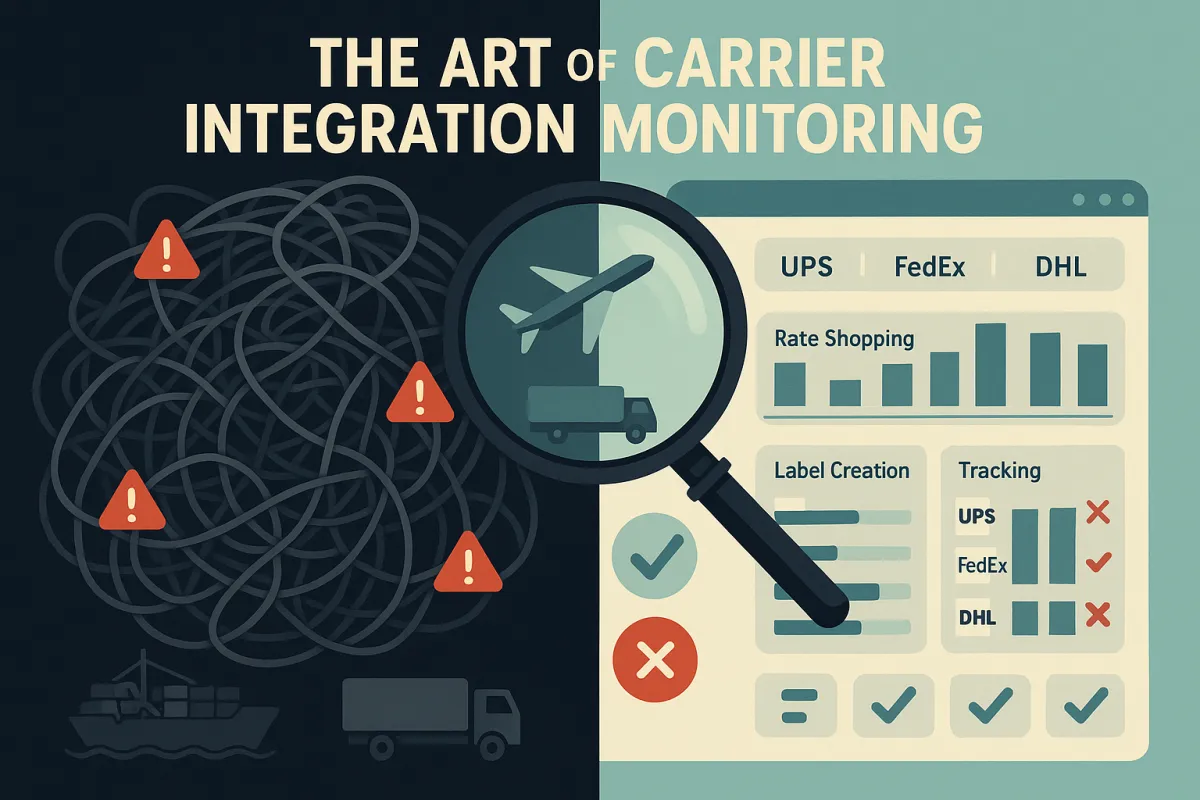

Standard monitoring tools miss the critical patterns unique to carrier APIs. While Datadog might catch your server metrics and New Relic monitors your application performance, neither understands why UPS suddenly started returning 500 errors for rate requests during peak shipping season, or why FedEx's API latency spiked precisely when your Black Friday labels needed processing.

Why Standard API Monitoring Fails for Carrier APIs

Generic monitoring treats all APIs the same, and that assumption breaks quickly with carriers. Most global carriers offer some variation of delivery tracking API for monitoring parcels, containers, trucks, and truckload movements during multi-modal journeys by air, sea, road, or rail. Protocols vary widely: some carriers offer modern REST APIs, others still use SOAP XML, and a surprising number rely on EDI over FTP for certain functions.

In one outage we recorded, the carrier's system appeared to flap up and down, so our tests sometimes connected successfully and the single incident showed up as a long list of separate outages. This intermittent failure pattern is common with carrier APIs. A standard health check that pings an endpoint once a minute may report "UP" while missing the 30-second windows in which real rate requests fail.

Carrier APIs also have unique failure modes. Rate shopping might work perfectly while label creation fails silently. Tracking updates could be delayed by hours without any HTTP error status. Your generic monitoring won't catch these carrier-specific problems until they've already impacted shipments.

The Hidden Costs of Carrier API Downtime

Without live rates, customers can't see when packages will be delivered or compare shipping methods by price. When they can't clearly see which shipping methods are available, what each costs, and when packages will arrive, they are more likely to abandon their carts.

The real cost extends beyond immediate cart abandonment. Manual rate shopping during outages ties up customer service teams. Delayed label creation pushes shipments to the next business day. Failed tracking updates generate support calls from worried customers. Each minute of carrier API downtime creates a cascade of operational overhead that multiplies across your entire shipping operation.

Architecture Patterns for Carrier-Specific Monitoring

Building effective carrier API monitoring requires understanding how carriers behave differently from typical web APIs. Here's a reference architecture that accounts for these patterns:

```text
┌──────────────────┐    ┌──────────────────┐    ┌──────────────────┐
│  Rate Shopping   │    │  Label Creation  │    │     Tracking     │
│     Monitor      │    │     Monitor      │    │     Monitor      │
└──────────────────┘    └──────────────────┘    └──────────────────┘
          │                       │                       │
          └───────────────────────┼───────────────────────┘
                                  │
                    ┌─────────────▼─────────────┐
                    │   Carrier Health Engine   │
                    │  • Per-carrier baselines  │
                    │  • Context-aware alerts   │
                    │  • Schema validation      │
                    └─────────────┬─────────────┘
                                  │
                    ┌─────────────▼─────────────┐
                    │    Multi-tenant Router    │
                    │  • Tenant isolation       │
                    │  • Weighted routing       │
                    │  • Circuit breakers       │
                    └─────────────┬─────────────┘
                                  │
          ┌───────────────────────┼───────────────────────┐
          │                       │                       │
┌─────────▼────────┐    ┌─────────▼────────┐    ┌─────────▼────────┐
│    UPS/FedEx     │    │       DHL        │    │     Regional     │
│    REST APIs     │    │     SOAP/XML     │    │     Carriers     │
└──────────────────┘    └──────────────────┘    └──────────────────┘
```

This architecture separates concerns by function (rate shopping, labelling, tracking) rather than by carrier. Each monitor understands the specific response patterns and failure modes for its function across all carriers. The Carrier Health Engine maintains baseline performance profiles for each carrier and can detect when UPS response times suddenly jump or when DHL starts returning malformed XML.

Shipping API monitors are especially valuable for enterprise organisations that have negotiated their own SLA terms with logistics partners covering API downtime. Monitoring lets these organisations verify whether each carrier's API performs as agreed, so it needs to track those negotiated SLAs per carrier rather than generic uptime metrics.

Multi-Tenant Monitoring Boundaries

Most carrier integration platforms serve multiple shippers. Your monitoring architecture must isolate performance data and alerting per tenant while efficiently sharing carrier connections. Tenant A shouldn't receive alerts about Tenant B's failed rate requests, but both need to know if UPS is experiencing a system-wide outage.

Design tenant-specific dashboards that show only relevant carrier performance. A tenant shipping exclusively within the EU doesn't need alerts about USPS domestic service issues. Use tenant-specific error budgets, since a high-volume shipper might have tighter SLAs than an occasional user. This segmentation helps multi-carrier platforms like Cargoson, nShift, and EasyPost manage diverse customer expectations without alert noise.
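A minimal sketch of that routing rule, assuming each tenant record lists the carriers it ships with and that an alert without a tenant ID represents a carrier-wide incident. `Tenant`, `Alert`, and `route_alert` are hypothetical names, not part of any platform's API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Tenant:
    tenant_id: str
    carriers: set[str]  # carriers this tenant actually ships with


@dataclass
class Alert:
    carrier: str
    message: str
    tenant_id: str | None = None  # None marks a carrier-wide incident


def route_alert(alert: Alert, tenants: list[Tenant]) -> list[str]:
    """Return the IDs of tenants that should receive this alert."""
    if alert.tenant_id is not None:
        # Tenant-scoped failure (e.g. one shipper's bad credentials): notify only that tenant.
        return [alert.tenant_id]
    # Carrier-wide incident: notify every tenant that ships with this carrier.
    return [t.tenant_id for t in tenants if alert.carrier in t.carriers]


tenants = [Tenant("eu-shop", {"DHL"}), Tenant("us-shop", {"UPS", "USPS"})]
print(route_alert(Alert("USPS", "USPS domestic rating errors"), tenants))  # ['us-shop']
```

Because carrier-wide alerts are filtered by the carriers each tenant uses, the EU-only tenant is never paged for a USPS domestic outage, while a system-wide UPS incident still reaches everyone who ships with UPS.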

Detecting API Version Changes Before They Break

Relying on third-party APIs makes applications vulnerable to change: one small change to an API can break an entire integration. API changes are inevitable, but proactive monitoring turns potential surprises into planned maintenance.

Carrier APIs evolve constantly. FedEx might deprecate a rate shopping endpoint, UPS could change their authentication requirements, or DHL might introduce new required fields for international shipments. When third-party APIs change without warning, existing integrations break, potentially halting critical business workflows.

Schema drift detection is your first line of defence. Monitor API responses for unexpected field additions, removals, or type changes. If UPS renames a field or changes its type in the address validation response, your integration can break before you know the change exists. Set up automated contract tests that validate not just response status codes but the actual data structure.
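A minimal sketch of schema drift detection, assuming responses arrive as parsed JSON dictionaries and that you store a baseline "shape" (field path to type name) captured from a known-good response. The helper names and sample fields are hypothetical, and the sketch does not descend into lists, which a production version would need to handle.

```python
from __future__ import annotations

from typing import Any


def describe_shape(payload: dict[str, Any], prefix: str = "") -> dict[str, str]:
    """Flatten nested dicts into {dotted.path: type name}. Lists are not descended."""
    shape: dict[str, str] = {}
    for key, value in payload.items():
        path = f"{prefix}{key}"
        if isinstance(value, dict):
            shape.update(describe_shape(value, prefix=f"{path}."))
        else:
            shape[path] = type(value).__name__
    return shape


def detect_drift(baseline: dict[str, str], observed: dict[str, str]) -> dict[str, list[str]]:
    """Report fields added, removed, or retyped relative to the baseline shape."""
    common = baseline.keys() & observed.keys()
    return {
        "added": sorted(observed.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - observed.keys()),
        "type_changed": sorted(k for k in common if baseline[k] != observed[k]),
    }


# Hypothetical address-validation payloads, not UPS's real schema.
baseline = describe_shape({"address": {"city": "Atlanta", "postalCode": "30301"}, "valid": True})
observed = describe_shape(
    {"address": {"city": "Atlanta", "postalCode": 30301}, "valid": True, "classification": "commercial"}
)
print(detect_drift(baseline, observed))
# {'added': ['classification'], 'removed': [], 'type_changed': ['address.postalCode']}
```

Running `detect_drift` against sampled production responses, not only in CI, catches changes that ship between your own deployments. A type change is usually the most urgent finding, because it breaks parsers that an added field would not.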

Parse deprecation headers and warning messages from carrier responses. Some carriers send a `Sunset` header (RFC 8594) or custom warning fields well before removing endpoints. Create automated workflows that flag these warnings and open engineering tickets. Don't wait for the "surprise" email announcing that a critical API version will be retired in two weeks.
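The `Sunset` header defined in RFC 8594 carries an HTTP-date. The sketch below is a hypothetical helper rather than any carrier SDK's API. It parses that date with Python's standard `email.utils.parsedate_to_datetime` and returns a ticket-ready message when the sunset falls inside a warning window. Custom carrier warning fields vary too much to generalize, so they are left out.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone
from email.utils import parsedate_to_datetime


def sunset_warning(headers: dict[str, str], now: datetime, warn_days: int = 90) -> str | None:
    """Return a ticket-ready message if a Sunset header falls within warn_days."""
    # HTTP header names are case-insensitive, so normalize before looking up.
    lowered = {name.lower(): value for name, value in headers.items()}
    raw = lowered.get("sunset")
    if raw is None:
        return None
    try:
        sunset = parsedate_to_datetime(raw)  # RFC 8594: the value is an HTTP-date
    except (TypeError, ValueError):  # older Pythons raise TypeError on unparseable input
        return f"Unparseable Sunset header: {raw!r}"
    if sunset.tzinfo is None:
        sunset = sunset.replace(tzinfo=timezone.utc)
    remaining = sunset - now
    if remaining <= timedelta(days=warn_days):
        return f"Endpoint sunsets on {sunset.date().isoformat()} ({remaining.days} days left)"
    return None


now = datetime(2025, 10, 1, tzinfo=timezone.utc)
print(sunset_warning({"Sunset": "Wed, 31 Dec 2025 23:59:59 GMT"}, now, warn_days=120))
# Endpoint sunsets on 2025-12-31 (91 days left)
```

An unparseable header is reported rather than ignored, because a carrier that sends a malformed `Sunset` value is still signalling that something is changing.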

Automated Contract Testing Pipelines

Traditional API monitoring pings endpoints and checks status codes. Contract testing validates the API actually returns usable data in the expected format. Run these tests continuously, not just during deployments.

For rate shopping APIs, validate that returned rates include all required fields: service type, cost, delivery date, and any carrier-specific identifiers your system needs. For tracking APIs, verify event timestamps are properly formatted and location data includes postal codes. These detailed validations catch breaking changes that simple ping tests miss.
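A sketch of these contract checks, assuming carrier responses have already been mapped into an internal model that uses the hypothetical field names below (`service_type`, `cost`, `delivery_date`, `carrier_service_code`, `timestamp`, `location.postal_code`). Each validator returns a list of human-readable violations so a scheduled job can alert on any non-empty result.

```python
from __future__ import annotations

from datetime import datetime
from typing import Any

# Hypothetical internal field names after mapping each carrier's response.
REQUIRED_RATE_FIELDS = ("service_type", "cost", "delivery_date", "carrier_service_code")


def validate_rate(rate: dict[str, Any]) -> list[str]:
    """Return contract violations for one normalized rate quote."""
    errors = [f"missing {name}" for name in REQUIRED_RATE_FIELDS if rate.get(name) in (None, "")]
    cost = rate.get("cost")
    if cost not in (None, ""):
        # bool is a subclass of int, so exclude it explicitly.
        if isinstance(cost, bool) or not isinstance(cost, (int, float)) or cost < 0:
            errors.append("cost must be a non-negative number")
    return errors


def validate_tracking_event(event: dict[str, Any]) -> list[str]:
    """Return contract violations for one normalized tracking event."""
    errors: list[str] = []
    try:
        # Python < 3.11 rejects a trailing "Z"; normalize it to "+00:00" first.
        datetime.fromisoformat(event.get("timestamp"))
    except (TypeError, ValueError):
        errors.append("timestamp is not ISO 8601")
    location = event.get("location") or {}
    if not location.get("postal_code"):
        errors.append("location.postal_code missing")
    return errors


print(validate_rate({"service_type": "Ground", "cost": -1}))
# ['missing delivery_date', 'missing carrier_service_code', 'cost must be a non-negative number']
```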

Modern platforms like ShipEngine, Cargoson, and nShift build contract testing into their integration pipelines. When DHL introduces a new required field for European shipments, the contract tests fail immediately, triggering an engineering review before customer shipments are affected.

Real-Time Health Scoring and Alerting

API monitoring helps by detecting and alerting on performance thresholds and API availability before problems reach users or breach SLAs and SLOs. Carrier APIs, however, need scoring that accounts for business impact, not just technical metrics.

Design weighted health scores that reflect your actual usage patterns. If 80% of your volume goes through UPS Ground service, weight UPS Ground performance heavily in your overall health score. A five-minute outage in UPS Next Day Air might barely register, while the same outage in UPS Ground creates immediate business impact.
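One way to compute such a score, assuming each service's health is expressed as the fraction of successful checks in the current window (0.0 to 1.0) and the weights come from your own volume mix. `weighted_health` is a hypothetical helper, and the 80/20 split mirrors the example above.

```python
from __future__ import annotations


def weighted_health(service_health: dict[str, float], volume_share: dict[str, float]) -> float:
    """Volume-weighted health score in [0.0, 1.0].

    service_health: fraction of successful checks per service in the current window.
    volume_share: share of shipment volume per service (need not sum to 1).
    Services with no health data count as 0.0, a deliberately pessimistic choice.
    """
    total = sum(volume_share.values())
    if total <= 0:
        raise ValueError("volume_share needs at least one positive weight")
    return sum(service_health.get(name, 0.0) * weight for name, weight in volume_share.items()) / total


volume = {"UPS Ground": 0.8, "UPS Next Day Air": 0.2}
print(weighted_health({"UPS Ground": 1.0, "UPS Next Day Air": 0.0}, volume))  # 0.8
print(weighted_health({"UPS Ground": 0.0, "UPS Next Day Air": 1.0}, volume))  # 0.2
```

With this weighting, a full UPS Next Day Air outage only pulls the score down to 0.8, while the same outage in UPS Ground drops it to 0.2, which matches the business impact described above.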

Build carrier-specific performance baselines. UPS typically responds to rate requests in 200-400ms during business hours, but spikes to 800ms during peak season. DHL's SOAP API normally takes 800-1200ms but can stretch to 3 seconds during European peak hours. Alert when performance degrades beyond these carrier-specific normals, not arbitrary thresholds.
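A minimal sketch of a per-carrier baseline: it keeps a rolling window of recent latencies for each carrier and flags a request only when it exceeds that carrier's own 95th percentile by a tolerance factor. The window size, the p95 choice, and the 1.5x tolerance are assumptions to tune, and `CarrierBaseline` is a hypothetical class.

```python
from __future__ import annotations

import statistics
from collections import defaultdict, deque


class CarrierBaseline:
    """Rolling latency baseline per carrier (hypothetical helper)."""

    def __init__(self, window: int = 500, min_samples: int = 50) -> None:
        self.min_samples = max(min_samples, 2)  # quantiles needs at least two points
        # One bounded deque per carrier; the oldest samples fall off automatically.
        self.samples: defaultdict[str, deque[float]] = defaultdict(lambda: deque(maxlen=window))

    def record(self, carrier: str, latency_ms: float) -> None:
        self.samples[carrier].append(latency_ms)

    def threshold(self, carrier: str, tolerance: float = 1.5) -> float | None:
        """tolerance x this carrier's own p95, or None until enough data exists."""
        data = self.samples.get(carrier)
        if data is None or len(data) < self.min_samples:
            return None
        # "inclusive" keeps the estimate within the observed range; the last
        # of the 19 cut points for n=20 is the 95th percentile.
        p95 = statistics.quantiles(data, n=20, method="inclusive")[-1]
        return p95 * tolerance

    def is_degraded(self, carrier: str, latency_ms: float, tolerance: float = 1.5) -> bool:
        limit = self.threshold(carrier, tolerance)
        return limit is not None and latency_ms > limit


baseline = CarrierBaseline(min_samples=5)
for ms in (220, 260, 310, 340, 390):  # UPS business-hours samples
    baseline.record("UPS", ms)
print(baseline.is_degraded("UPS", 380))  # False
print(baseline.is_degraded("UPS", 900))  # True
```

To capture the business-hours versus peak-season differences described above, record samples under a combined label such as `"UPS:peak"` instead of the bare carrier name, so each period gets its own normal.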

Consider regional differences in your scoring. A carrier might perform well for domestic shipments while struggling with international rates. Your health scoring should reflect these nuances; Manhattan Active, Blue Yonder, and Cargoson all implement regional performance tracking for this reason.

SLO-Based Alerting for Carrier Performance

Service level indicators (SLIs) and objectives (SLOs) connect monitoring to business expectations. Choose an SLI metric that both technical and non-technical stakeholders understand, then set a target (the SLO) as a simple percentage that shows how often you meet your speed and quality obligations.

Move beyond simple uptime percentages to business-relevant SLOs. Instead of "UPS API is 99.9% available," track "Rate shopping completes successfully within 2 seconds for 95% of requests." This SLO directly relates to customer experience during checkout.
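The SLO above can be measured directly from request records. This sketch assumes each rate request is logged with a success flag and a duration in milliseconds; `RateRequest`, `rate_shopping_sli`, and `meets_slo` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class RateRequest:
    ok: bool            # carrier returned usable rates
    duration_ms: float  # end-to-end time seen by checkout


def rate_shopping_sli(requests: list[RateRequest], latency_limit_ms: float = 2000) -> float:
    """Fraction of rate requests that succeeded within the latency limit."""
    if not requests:
        return 1.0  # policy choice: no traffic counts as meeting the objective
    good = sum(1 for r in requests if r.ok and r.duration_ms <= latency_limit_ms)
    return good / len(requests)


def meets_slo(requests: list[RateRequest], target: float = 0.95, latency_limit_ms: float = 2000) -> bool:
    return rate_shopping_sli(requests, latency_limit_ms) >= target


window = [RateRequest(True, 350)] * 18 + [RateRequest(True, 2600), RateRequest(False, 120)]
print(rate_shopping_sli(window), meets_slo(window))  # 0.9 False
```

Note that the slow-but-successful request counts against the SLI just like the failed one, because a rate that arrives after 2.6 seconds can still cost you the checkout.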

Tools such as Nobl9 calculate error budgets from acceptable thresholds and can trigger alerts and workflows when the error budget burn rate is too high or the budget has been exceeded. Apply the same approach to carrier performance: set monthly error budgets for each carrier based on your business requirements. A high-volume shipper might need 99.95% successful rate shopping, while occasional shippers can accept 99.5%.

Track burn rate, not just absolute errors. If your monthly error budget allows 100 failed requests, but 50 failures happen in the first week, you're burning budget too quickly. Alert on these trends before you exhaust your error budget and breach customer SLAs.
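The arithmetic behind error budgets and burn rate is small enough to sketch. This assumes a 30-day window and a forecast of request volume. A burn rate of 1.0 means the budget runs out exactly at the end of the window, and anything higher means it runs out early. The function names are hypothetical.

```python
from __future__ import annotations


def error_budget(slo_target: float, expected_requests: int) -> float:
    """Failures the window can absorb, e.g. a 99.5% target over 20,000 requests allows about 100."""
    return (1.0 - slo_target) * expected_requests


def burn_rate(failures: int, budget: float, elapsed_days: float, window_days: float = 30.0) -> float:
    """Share of budget spent divided by share of window elapsed; above 1.0 exhausts early."""
    if budget <= 0 or elapsed_days <= 0:
        raise ValueError("budget and elapsed_days must be positive")
    return (failures / budget) / (elapsed_days / window_days)


def days_until_exhausted(failures: int, budget: float, elapsed_days: float) -> float | None:
    """Days from the start of the window until the budget runs out at the current pace."""
    if failures == 0:
        return None
    return elapsed_days * budget / failures


budget = error_budget(0.995, 20_000)
print(round(budget))                     # 100
print(round(burn_rate(50, 100, 7), 2))   # 2.14
print(days_until_exhausted(50, 100, 7))  # 14.0
```

For the example above, 50 failures against a 100-failure budget after 7 days gives a burn rate of about 2.1, so at that pace the budget is gone after roughly 14 days, well before the month ends.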

Observability Data Pipeline Design

A shipping API monitor typically records each request and response to track API performance, then checks the results against the acceptable limits set in the carrier's API documentation. Storing and analyzing this monitoring data, however, requires thoughtful pipeline architecture.

Structure your observability data for both real-time alerting and historical analysis. Use consistent field naming across carriers - normalize UPS's "ResponseTime" and FedEx's "ProcessingDuration" into a standard "api_duration_ms" field. This consistency enables cross-carrier performance comparisons and simplifies alerting logic.
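A sketch of that normalization step, assuming a per-carrier mapping from source field to a unit conversion factor. The field names follow the example above and may be illustrative rather than the carriers' actual payloads; the units (milliseconds for UPS, seconds for FedEx) are placeholder assumptions, so confirm both against each carrier's documentation.

```python
from __future__ import annotations

from typing import Any

# Hypothetical mapping: carrier -> (source duration field, multiplier to milliseconds).
# Field names follow the article's example; the units are assumptions to verify.
DURATION_FIELDS: dict[str, tuple[str, float]] = {
    "UPS": ("ResponseTime", 1.0),             # assumed to be milliseconds already
    "FedEx": ("ProcessingDuration", 1000.0),  # assumed to be seconds
}


def normalize_duration(carrier: str, raw: dict[str, Any]) -> dict[str, Any]:
    """Map a carrier-specific timing field onto the shared api_duration_ms field.

    Raises KeyError for an unmapped carrier or a missing field, so gaps surface loudly.
    """
    field_name, to_ms = DURATION_FIELDS[carrier]
    return {"carrier": carrier, "api_duration_ms": float(raw[field_name]) * to_ms}


print(normalize_duration("UPS", {"ResponseTime": 250}))
# {'carrier': 'UPS', 'api_duration_ms': 250.0}
print(normalize_duration("FedEx", {"ProcessingDuration": "0.4"}))
```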

Include business context in your telemetry data. Tag requests with tenant ID, shipping service level, and package characteristics. When diagnosing a performance issue, you need to know if slow responses affect only international shipments or if the problem spans all service types. Context-rich data accelerates troubleshooting and enables more precise alerting.
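A sketch of context-rich telemetry: each event carries the tenant, service level, and a package attribute (here just an `international` flag), so a single helper can show whether slowness is confined to one segment. `ApiEvent` and `median_latency_by` are hypothetical names, and the latencies are illustrative.

```python
from __future__ import annotations

import statistics
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ApiEvent:
    carrier: str
    tenant_id: str
    service_level: str
    international: bool  # one example of a package/shipment attribute
    api_duration_ms: float


def median_latency_by(events: list[ApiEvent], tag: str) -> dict[object, float]:
    """Group events by one context field and return the median latency per group."""
    groups: defaultdict[object, list[float]] = defaultdict(list)
    for event in events:
        groups[getattr(event, tag)].append(event.api_duration_ms)
    return {key: statistics.median(values) for key, values in groups.items()}


events = [
    ApiEvent("DHL", "t1", "express", False, 280),
    ApiEvent("DHL", "t2", "express", False, 300),
    ApiEvent("DHL", "t1", "economy", False, 320),
    ApiEvent("DHL", "t1", "express", True, 2300),
    ApiEvent("DHL", "t2", "economy", True, 2400),
    ApiEvent("DHL", "t2", "express", True, 2500),
]
print(median_latency_by(events, "international"))  # {False: 300, True: 2400}
print(median_latency_by(events, "service_level"))  # {'express': 1300.0, 'economy': 1360.0}
```

Grouping by `international` cleanly separates fast and slow traffic, while grouping by `service_level` blurs them together, which points the investigation at international handling rather than a particular service tier.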

Design for compliance requirements from day one. Transportation and logistics companies often face regulatory audits. Structure logs to support these requirements without impacting runtime performance. Many TMS vendors, including MercuryGate, Descartes, and Transporeon, build compliance-ready logging into their carrier integration layers.

Cost-Effective Log Retention for Compliance

Shipping data has long retention requirements but highly variable access patterns. Recent performance data needs millisecond query response for alerting. Month-old tracking events might only be accessed during customer disputes or compliance audits.

Implement tiered storage that matches access patterns. Keep the last 7 days of detailed API logs in high-performance storage for real-time analysis. Move data between 7 and 90 days old to medium-cost storage for troubleshooting and trend analysis. Archive anything older to low-cost storage with slower retrieval times for compliance purposes.
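A minimal sketch of the tiering decision, assuming data moves to medium-cost storage once it is older than 7 days and to the archive after 90 days. `Tier`, `tier_for`, and `plan_moves` are hypothetical; in practice you would usually express the same thresholds in your cloud provider's lifecycle rules. The planner only moves records and never deletes them, since deletion should follow your compliance retention schedule.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone
from enum import Enum


class Tier(Enum):
    HOT = "hot"          # high-performance storage for real-time analysis
    WARM = "warm"        # medium-cost storage for troubleshooting and trends
    ARCHIVE = "archive"  # low-cost, slow-retrieval storage for compliance


HOT_DAYS = 7    # assumed boundary
WARM_DAYS = 90  # assumed boundary


def tier_for(created_at: datetime, now: datetime) -> Tier:
    age = now - created_at
    if age <= timedelta(days=HOT_DAYS):
        return Tier.HOT
    if age <= timedelta(days=WARM_DAYS):
        return Tier.WARM
    return Tier.ARCHIVE


def plan_moves(records: dict[str, tuple[datetime, Tier]], now: datetime) -> dict[str, Tier]:
    """Map record ID -> target tier for records stored in the wrong tier.

    Only moves are planned; nothing is deleted, so this job cannot drop
    compliance-required data.
    """
    moves: dict[str, Tier] = {}
    for record_id, (created_at, current) in records.items():
        target = tier_for(created_at, now)
        if target != current:
            moves[record_id] = target
    return moves


now = datetime(2025, 6, 30, tzinfo=timezone.utc)
records = {
    "log-a": (now - timedelta(days=2), Tier.HOT),
    "log-b": (now - timedelta(days=20), Tier.HOT),
    "log-c": (now - timedelta(days=200), Tier.WARM),
}
print(plan_moves(records, now))  # {'log-b': <Tier.WARM: 'warm'>, 'log-c': <Tier.ARCHIVE: 'archive'>}
```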

Automate this lifecycle management. Manual data archiving creates operational overhead and increases the risk of accidentally deleting compliance-required data. Cloud platforms offer automated tiering, but ensure your archiving strategy aligns with transportation industry regulations and your specific carrier agreements.

The investment in production-ready API monitoring pays dividends when carriers make unannounced changes or experience unexpected outages. Your monitoring system becomes the early warning system that keeps shipments flowing while competitors scramble to understand why their integrations suddenly stopped working.

Start with monitoring your highest-volume carrier endpoints first. Implement schema validation and baseline performance tracking. Add SLO-based alerting that reflects business impact rather than arbitrary technical thresholds. Your customers will notice the difference when their shipments keep moving despite carrier API turbulence.