Tenant-Aware Routing in Carrier Integration Middleware: Preventing Data Leakage at Scale

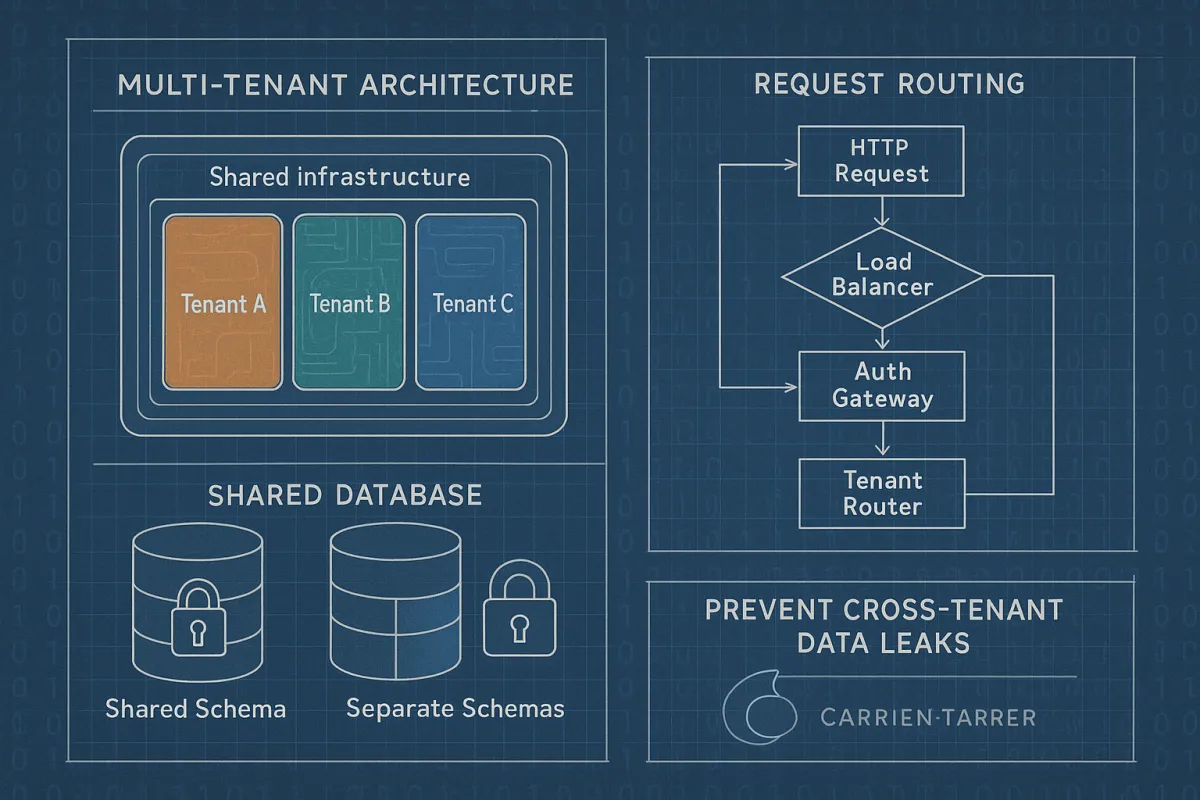

Multi-tenant carrier integration middleware needs more than a shared database and token-based routing. Cloudflare's September 2025 outage showed why: its Tenant Service is part of API request authorization, so when that service became overloaded, other APIs and the dashboard failed along with it. And if tenant isolation is not properly enforced, there is a risk of unauthorized access or data leakage between tenants. That makes request-level enforcement of tenant context crucial for carrier platforms handling shipping data for thousands of customers.

The Tenant Isolation Challenge in Carrier Integration

Multi-tenant carrier integration platforms face unique challenges beyond typical SaaS applications. Each tenant's shipping data contains competitive intelligence: rate negotiations with carriers, shipping volumes, customer delivery addresses, and supply chain patterns. Unlike CRM or accounting software, where a data breach is mainly a privacy problem, a breach on a shipping platform also exposes business strategy.

Because all tenants share the same infrastructure, one tenant's heavy usage can degrade performance for others. Keeping the system balanced during peak shipping seasons requires careful resource allocation and monitoring, and potentially microservices and container orchestration. During Black Friday, a single large retailer can generate 10x its normal label volume, overwhelming shared rate-shopping APIs and causing timeouts for smaller tenants.

Platforms like Cargoson, nShift, and EasyPost each handle tenant isolation differently. Developers praise EasyPost's clean API and documentation, competitive pricing, and broad courier network access. However, reviews consistently highlight customer support issues, with multiple users reporting limited support hours and difficulty reaching a human representative. One possible reading is that its multi-tenant model trades tenant-specific operational support for cost efficiency.

Request Routing Patterns: From Headers to Context

Effective tenant-aware routing starts at the edge. Many carrier integration platforms identify tenants with a request header, but this approach creates security vulnerabilities because headers can be spoofed or manipulated.

Here's how a request flows through typical middleware layers:

HTTP Request -> Load Balancer -> Auth Gateway -> Tenant Router -> Service Layer

Incoming request: X-Tenant-ID: 123 (untrusted header)

Auth Gateway: JWT token validation; extract the tenant claim

Tenant Router: tenant context injection, {tenantId: "123", tier: "premium"}

Service Layer: PostgreSQL query scoped with SET search_path = 'tenant_123'

Token-based identification is more secure. The authentication layer validates the JWT and extracts the tenant ID from its cryptographically signed claims, so a client cannot tamper with it. This tenant context is then injected into every downstream service call as structured metadata.

Database Schema Separation vs Shared Tables

Two common design patterns are shared database, shared schema, where all tenants share the same database and schema (simple and cost-effective, but with weak data isolation and limited customization), and shared database, separate schemas, where tenants share a database but each gets its own schema, improving isolation while keeping operations simple.

For carrier integration specifically, schema-per-tenant works well for PostgreSQL deployments. Each tenant gets a dedicated schema with identical table structures, and the middleware modifies the connection's search_path dynamically:

SET search_path = 'tenant_xyz';

This approach provides strong logical separation while allowing shared connection pools and simpler backups. For a greenfield project, choose the database-per-tenant model only if your business requires strict regulatory compliance from day one, although some carrier platforms later migrate their largest tenants to separate databases for performance isolation.
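One detail matters when tenants share a connection pool: a plain SET search_path stays on the connection after the request finishes, so the next request that borrows that connection could run against the previous tenant's schema. The sketch below builds a SET LOCAL statement instead, which PostgreSQL reverts when the current transaction ends. It assumes tenant IDs limited to lowercase letters, digits, and underscores (a hypothetical format) and the tenant_ schema prefix used in the examples above; because schema names cannot be passed as bind parameters, the ID is validated before being quoted into the statement.

```go
package main

import (
	"fmt"
	"regexp"
)

// validTenant is an assumed ID format. Keeping it strict means the ID can be
// safely embedded in a quoted identifier (no quotes, no whitespace).
var validTenant = regexp.MustCompile(`^[a-z0-9_]{1,48}$`)

// searchPathSQL returns a statement that scopes one transaction to a tenant schema.
// SET LOCAL (rather than SET) is undone at COMMIT or ROLLBACK, so a pooled
// connection cannot carry one tenant's search_path into the next request.
func searchPathSQL(tenantID string) (string, error) {
	if !validTenant.MatchString(tenantID) {
		return "", fmt.Errorf("invalid tenant id %q", tenantID)
	}
	// Identifiers cannot be bound as query parameters, hence validate-then-quote.
	return fmt.Sprintf(`SET LOCAL search_path TO "tenant_%s"`, tenantID), nil
}
```

The returned statement would be executed as the first statement of each request's transaction, for example with ExecContext on the *sql.Tx returned by BeginTx in database/sql. Outside a transaction block, PostgreSQL ignores SET LOCAL (with a warning), so the transaction is what makes the scoping safe.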

Preventing Cross-Tenant Data Leaks

The September 2025 Cloudflare outage demonstrates how tenant service failures cascade. A dashboard bug caused repeated, unnecessary calls to the Tenant Service API. The calls were made from a React useEffect hook whose dependency array mistakenly included an object that was recreated on every state or prop change. React treated that object as "always new," so the effect re-ran on every render, and the resulting flood of calls overwhelmed the Tenant Service.

Carrier platforms need defense-in-depth tenant isolation. Tenant filtering should be enforced centrally in the ORM or data-access layer rather than left to each application query, so that every SQL query automatically includes a tenant ID predicate even when a developer forgets to add one:

SELECT * FROM shipments WHERE tenant_id = $1 AND tracking_number = $2;  -- $1 = current tenant ID, $2 = 'ABC123'

Network policies provide another isolation layer. Where tenants run in dedicated pods or namespaces, Kubernetes NetworkPolicies can restrict pod-to-pod traffic based on tenant labels, blocking cross-tenant API calls even when the application has a bug.

Middleware Stack Implementation

Tenant context must be immutable once established. Create a TenantContext object that includes not just the ID, but also tier information, feature flags, and rate limits:

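type TenantContext struct {
	ID          string          // tenant ID taken from the validated token claim
	Tier        string          // service tier, e.g. "basic" or "premium"
	RateLimit   int             // tenant-specific rate limit
	Features    map[string]bool // per-tenant feature flags
	DatabaseURL string          // location of the tenant's data store
}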

This context gets propagated through all service calls using request headers or gRPC metadata. When tenant context is missing, services should fail closed, returning 403 errors rather than operating without tenant boundaries.

Performance Considerations Under Load

The "noisy neighbor" problem, where one tenant's heavy usage degrades performance for everyone else, hits carrier platforms hardest during shipping peaks. A major retailer processing 100,000 labels per hour can saturate the rate-shopping APIs that smaller tenants depend on.

Token bucket algorithms provide tenant-specific rate limiting. Each tenant gets an individual bucket per operation, for example rate shopping (100 requests/minute), label generation (1,000/hour), and tracking queries (500/minute). Premium tiers get larger buckets and faster refill rates.

Platforms like ShipEngine and Cargoson take different approaches to carrier rates. One model lets a platform offer its merchants a choice between their own existing carrier contracts and the provider's pre-negotiated discounted rates. That flexibility requires complex rate management per tenant.

Observability for Multi-Tenant Systems

Standard monitoring, which aggregates metrics across the whole system, often falls short in multi-tenant environments. You need tenant-scoped metrics that can identify which tenant is causing performance degradation and whether it's intentional heavy usage or a runaway process.

The patterns discussed here, namely application configuration delivered through CI/CD, dynamic routing logic, and automated tenant provisioning, change how multi-tenant platforms are managed. With declarative configuration management, GitOps, and infrastructure as code, teams can run tenant operations without manual steps while keeping visibility into per-tenant resource consumption.

Each API request should generate metrics tagged with tenant ID, request type, and response time. Alert on tenant-specific anomalies: if Tenant XYZ normally generates 100 rate requests per minute but suddenly jumps to 10,000, that suggests either an integration bug or intentional abuse.
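A tenant-scoped anomaly check can be sketched with an exponentially weighted moving average (EWMA) per tenant and request type. The Detector type, the smoothing factor, and the 10x multiplier in the usage below are assumptions chosen to match the example in the text, not tuned values.

```go
package main

type seriesKey struct{ tenant, requestType string }

// Detector flags per-tenant request rates that jump far above a learned baseline.
type Detector struct {
	alpha    float64 // EWMA weight given to the newest sample
	factor   float64 // alert when a sample exceeds factor * baseline
	baseline map[seriesKey]float64
}

func NewDetector(alpha, factor float64) *Detector {
	return &Detector{alpha: alpha, factor: factor, baseline: map[seriesKey]float64{}}
}

// Observe records one requests-per-minute sample and reports whether it is anomalous.
func (d *Detector) Observe(tenant, requestType string, perMinute float64) bool {
	k := seriesKey{tenant, requestType}
	base, seen := d.baseline[k]
	if !seen {
		d.baseline[k] = perMinute // the first sample seeds the baseline
		return false
	}
	anomalous := base > 0 && perMinute > d.factor*base
	if !anomalous {
		// Learn only from normal samples so a spike does not become the new baseline.
		d.baseline[k] = d.alpha*perMinute + (1-d.alpha)*base
	}
	return anomalous
}
```

With NewDetector(0.1, 10), a tenant that has been sending about 100 rate requests per minute is flagged when a sample of 10,000 arrives. Skipping the baseline update for flagged samples keeps a sustained spike from quietly becoming normal; the trade-off is that a legitimate, permanent increase keeps alerting until someone resets that tenant's baseline.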

Deployment Patterns and Operational Concerns

Multi-tenant carrier platforms need zero-downtime deployments with tenant awareness. Blue-green deployments work, but you need canary releases per tenant tier. Premium tenants get new features first in controlled rollouts, while basic tier tenants receive updates after validation.
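Tier-aware canary routing can be reduced to a pure decision function. In this sketch the rollout stages, the 10 percent canary slice, and the tier names are hypothetical. Tenants are assigned to the canary by an FNV-1a hash of their ID, so the same premium tenants stay in the canary on every request and across restarts.

```go
package main

import "hash/fnv"

// Hypothetical rollout stages for one release.
const (
	StageOff           = iota // nobody gets the new version
	StagePremiumCanary        // a fixed slice of premium tenants
	StagePremiumAll           // every premium tenant
	StageAll                  // every tenant, after validation
)

// canaryPercent is an assumed canary slice size.
const canaryPercent = 10

// bucketOf maps a tenant deterministically into 0..99.
func bucketOf(tenantID string) uint32 {
	h := fnv.New32a()
	h.Write([]byte(tenantID))
	return h.Sum32() % 100
}

// ServeNewVersion decides whether a tenant's requests go to the new release.
func ServeNewVersion(tenantID, tier string, stage int) bool {
	switch stage {
	case StageAll:
		return true
	case StagePremiumAll:
		return tier == "premium"
	case StagePremiumCanary:
		return tier == "premium" && bucketOf(tenantID) < canaryPercent
	default:
		return false
	}
}
```

Keeping the decision deterministic matters for carrier traffic: a tenant flapping between versions mid-shipment would make label and tracking bugs much harder to attribute.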

Applied consistently, the same declarative approach lets teams keep tenants fully isolated while still scaling quickly, narrowing the long-standing gap between the efficiency of shared infrastructure and the security of dedicated tenant environments.

Tenant onboarding should be completely automated. When a new customer signs up, the system needs to provision database schemas, configure rate limits, create monitoring dashboards, and establish carrier connections without manual intervention. Platforms like nShift and Cargoson that serve enterprise customers need this automation to scale efficiently.

Feature flags become crucial for tenant management. Different customers may need different carrier integrations, API versions, or business rules. The platform needs to activate features per tenant without code deployments, allowing sales teams to enable premium features immediately after contract signature.
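Per-tenant activation without deployments comes down to resolving flags from data rather than code. The sketch below, with hypothetical flag and tier names, layers per-tenant overrides on top of tier defaults, so enabling a premium feature for one customer is a data change. In a real system both maps would be loaded from a configuration store rather than written as literals.

```go
package main

// FlagStore resolves feature flags per tenant: a per-tenant override wins,
// otherwise the tier default applies, otherwise the flag is off.
type FlagStore struct {
	TierDefaults map[string]map[string]bool // tier -> flag -> enabled
	Overrides    map[string]map[string]bool // tenantID -> flag -> enabled
}

// Enabled reports whether flag is on for the given tenant and tier.
func (s FlagStore) Enabled(tenantID, tier, flag string) bool {
	// Indexing a missing (nil) inner map is safe in Go and yields ok == false.
	if v, ok := s.Overrides[tenantID][flag]; ok {
		return v
	}
	return s.TierDefaults[tier][flag]
}
```

Overrides can switch a flag off as well as on, which lets support disable a feature for a single tenant without touching its tier.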

Configuration drift between tenants creates operational complexity. Use infrastructure as code to ensure consistent tenant environments while allowing necessary customizations. Each tenant's configuration should be version controlled and reproducible, preventing the "works in development, breaks in production" problems that plague manually configured systems.
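Drift detection compares what is committed in version control with what is actually running. In this hypothetical sketch, each tenant's desired configuration is a shared base plus that tenant's committed overrides, and Drift reports every key whose live value differs or that exists on only one side. The flat string map is a simplifying assumption; real configurations are usually nested.

```go
package main

import "sort"

// Desired merges the version-controlled base config with the tenant's
// committed customizations; overrides win.
func Desired(base, overrides map[string]string) map[string]string {
	out := make(map[string]string, len(base)+len(overrides))
	for k, v := range base {
		out[k] = v
	}
	for k, v := range overrides {
		out[k] = v
	}
	return out
}

// Drift lists, in sorted order, keys whose live value differs from the
// desired one, including keys present on only one side.
func Drift(desired, live map[string]string) []string {
	var keys []string
	for k, v := range desired {
		if lv, ok := live[k]; !ok || lv != v {
			keys = append(keys, k)
		}
	}
	for k := range live {
		if _, ok := desired[k]; !ok {
			keys = append(keys, k)
		}
	}
	sort.Strings(keys)
	return keys
}
```

Running such a check on a schedule and treating any non-empty result as an alert catches manual changes before they turn into environment-specific failures.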

The goal is reliable, scalable tenant management that prevents data leakage while maintaining the operational efficiency that makes multi-tenant platforms economically viable. When tenant isolation works correctly, customers should never know they're sharing infrastructure with competitors.