Webhook Retry Patterns for Carrier Integration: Building Resilient Event Processing at Scale

Carrier webhooks fail at rates that would bankrupt a traditional SaaS platform. The business costs of webhook failures can be substantial, including lost sales, customer dissatisfaction, regulatory penalties, and operational disruption costs, and in carrier integrations, these failures happen far more frequently than other domains.

The numbers tell a harsh story. An ideal retry rate should be less than 5% for most webhook systems, but carrier integration platforms routinely see retry rates above 20%. Why? Carrier APIs suffer from endemic reliability issues that compound webhook delivery challenges.

Ocean carriers like Maersk and MSC provide APIs with network issues or consumer service downtimes that persist for hours. LTL carriers frequently return 5xx errors during peak shipping periods when their systems buckle under volume. Last-mile delivery APIs from FedEx and UPS experience maintenance windows that can span entire nights, leaving webhook deliveries queued and aging.

Each failure cascades through your platform. When DHL's tracking API returns stale data for six hours, your webhook retry system hammers their endpoints, burning through your retry budget while delivering worthless notifications to downstream systems. Meanwhile, genuine status updates from functioning carriers get delayed behind the failed attempts, creating a traffic jam that affects all your tenants.

Multi-Tenant Failure Isolation

The core architectural challenge in carrier integration webhook systems isn't managing failures—it's preventing one tenant's failures from destroying everyone else's experience. Sharing the same messaging infrastructure across multiple tenants could expose the entire solution to the Noisy Neighbor issue. The activity of one tenant could harm other tenants, in terms of performance and operability.

Consider this scenario: Tenant A processes 50,000 shipments daily through a problematic ocean carrier whose API returns timeouts 40% of the time. Without proper isolation, their failed webhook retries consume queue capacity and worker threads that should serve Tenant B's 500 daily parcel shipments. Tenant B's customers start complaining about delayed notifications while Tenant A's massive retry storms continue.

Effective isolation requires tenant-aware queue partitioning. We aim to achieve fairness without relying on ad-hoc logic in the conversion-service, and this can be done with the concept of sharded queue. Behind the scenes, this queue corresponds to multiple shards that can be seen as different queues, one per tenant. When the conversion-service dequeues a job from the conversions-to-be-done-queue, it is actually dequeuing a job from one of the shards in a round-robin fashion.

For carrier webhooks, this translates to dedicated retry queues per tenant with separate backoff policies. When UPS's API starts timing out, only the affected tenant's webhook deliveries slow down. Other tenants continue receiving real-time notifications for DHL and FedEx shipments.

Circuit breaker patterns become essential at the tenant level. Each tenant should have individual circuit breakers for each carrier, preventing cascading failures across the platform. When a tenant's Maersk integration fails repeatedly, their circuit breaker opens, but other tenants' Maersk webhooks continue processing normally.

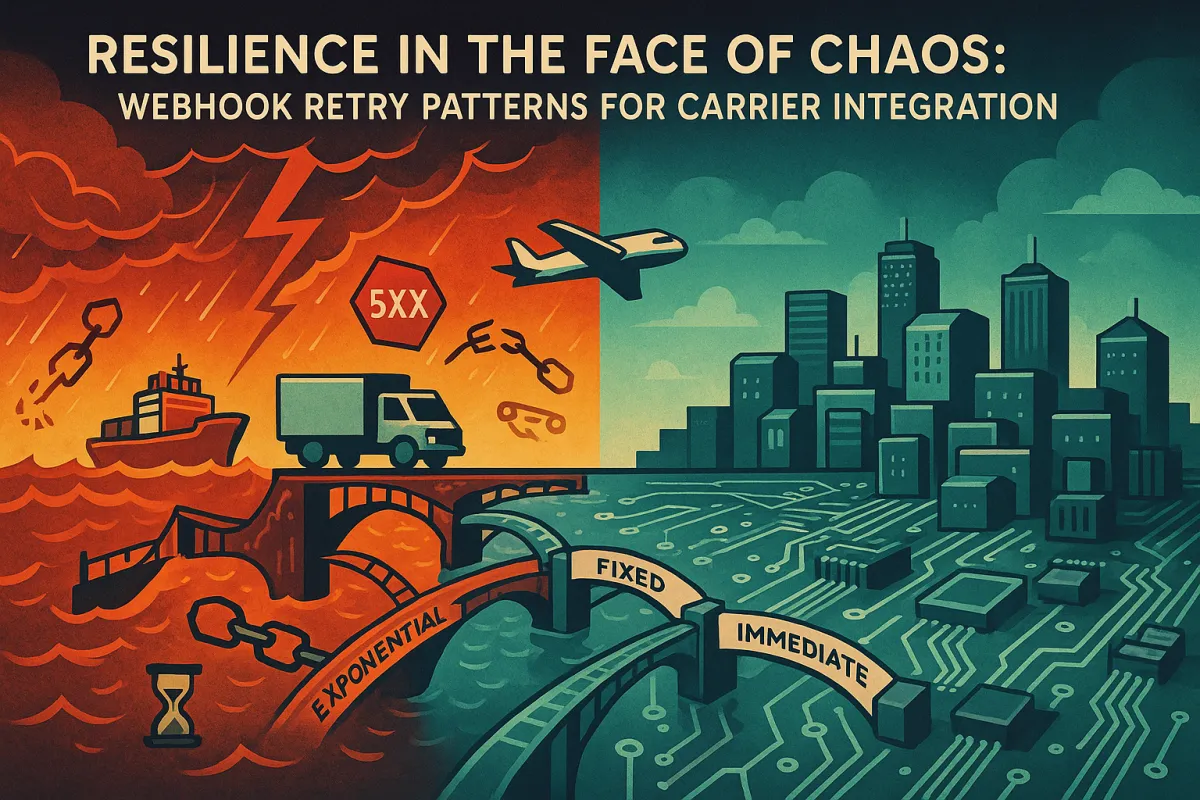

Exponential Backoff Strategies for Carrier Webhooks

Standard exponential backoff assumes transient network glitches—brief 30-second outages that resolve quickly. Carrier APIs break this assumption spectacularly. When Hapag-Lloyd's API goes down for planned maintenance, it stays down for 4-6 hours. One proven strategy to handle retries is exponential backoff. If it fails the second time, you wait a bit longer, and the cycle continues. Just using exponential backoff, however, might lead to a thundering herd problem where a lot of webhook events are retried simultaneously.

Traditional backoff formulas like 2^n seconds create problems in carrier integrations. After 10 attempts, you're waiting over 17 minutes between retries. But carrier outages often last multiple hours, making this approach either too aggressive (wasting resources on known-broken endpoints) or too conservative (missing the narrow window when services recover).

Jittered backoff becomes critical in carrier webhook systems. Jitter is basically a fancy term for adding a bit of randomness to your retry intervals to spread out the load. It prevents all of those webhooks from hammering the server at the same moment. When a major carrier like COSCO comes back online after maintenance, thousands of accumulated webhook retries could fire simultaneously without jitter, immediately overwhelming the recovered service.

A production-tested approach for carrier webhooks:

- Attempt 1: Immediate retry (network glitch recovery)

- Attempts 2-4: 1-5 minute intervals with ±30% jitter

- Attempts 5-8: 15-30 minute intervals with ±50% jitter

- Attempts 9+: 1-2 hour intervals with ±60% jitter

This pattern acknowledges that carrier API failures cluster around maintenance windows (usually 2-6 AM local time) and system overload during peak shipping periods (Monday mornings, holiday seasons).

Carrier-Aware Retry Logic

Not all carrier APIs fail the same way. UPS typically experiences short, sharp outages during system updates—30 minutes of complete unavailability followed by normal operation. DHL tends toward gradual degradation—response times climbing from 200ms to 30 seconds over several hours before partial recovery.

Ocean carriers follow different patterns entirely. Maersk's API might return stale data for hours while appearing technically available (200 status codes with 6-hour-old information). OOCL frequently returns 502 errors during European business hours due to capacity constraints.

Effective retry logic adapts to these patterns:

- UPS/FedEx pattern: Short, aggressive retries followed by longer delays

- DHL pattern: Gradual backoff with timeout adjustments

- Ocean carrier pattern: Data freshness validation with extended retry windows

Consider implementing carrier-specific retry policies in your webhook architecture. When UPS returns 503 errors, use rapid-fire retries for 10 minutes, then switch to hourly attempts. When Maersk returns data with timestamps older than 2 hours, treat it as a failure and extend retry intervals to match their typical data update cycles.

Dead Letter Queue Architecture for Webhook Processing

Even the most sophisticated retry strategy eventually admits defeat. There comes a time when you have to accept that a webhook is just not getting through. After a certain number of retry attempts, it's probably best to move the event to a Dead Letter Queue. This queue stores the events that couldn't be delivered, so you can inspect them later.

In carrier integration systems, dead letter queues serve a dual purpose: they prevent infinite retry loops and provide audit trails for compliance requirements. When a webhook carrying customs clearance notifications fails repeatedly, you need that data preserved for potential regulatory inquiries.

DLQ architecture for carrier webhooks requires careful categorisation:

- Temporary failures: Carrier API downtime, network timeouts

- Permanent failures: Invalid webhook URLs, authentication issues

- Business logic failures: Malformed payloads, validation errors

- Compliance failures: Messages requiring manual intervention

For webhooks or events that exhaust all retry attempts, dead letter queues (DLQs) provide a safety net. Instead of endlessly retrying, failed events are moved to a dedicated queue for manual review and troubleshooting. DLQs serve as both a storage solution and a diagnostic tool. For example, repeated failures from the same endpoint might signal a deeper issue requiring investigation.

A robust DLQ implementation includes automated alerting when certain thresholds are exceeded. If more than 100 webhooks from the same carrier end up in your DLQ within an hour, that likely indicates a systemic issue requiring immediate attention rather than individual message problems.

Observability and Alerting for Webhook Failures

Monitoring carrier webhook retry patterns reveals insights invisible in traditional web traffic. This metric provides insight into the reliability of your webhook delivery system. Keep track of the rate of failed webhook deliveries and the types of errors encountered. Understanding error patterns helps in identifying and resolving issues promptly.

Critical metrics for carrier webhook observability:

- Per-carrier success rates: Maersk at 72%, UPS at 94%, DHL at 87%

- Retry rate by tenant: Identify which tenants are affected by carrier issues

- Queue depth trends: Detect carrier outages before they impact service

- Delivery latency distribution: 95th percentile should stay below 30 minutes

Alert thresholds must account for carrier reliability baselines. A 10% failure rate might trigger immediate escalation for a payment processor webhook, but it represents a good day for some ocean carriers.

Time-based alerting provides crucial context. If webhook failures spike during known carrier maintenance windows (typically announced 48-72 hours in advance), suppress alerts and increase retry intervals automatically. When failures occur outside maintenance windows, escalate immediately.

Implementation Patterns and Trade-offs

Production carrier integration webhook systems require architectural decisions that balance reliability, cost, and complexity. The patterns that work for typical SaaS webhooks often crumble under the unique pressures of carrier APIs.

Queue depth management becomes critical when dealing with carrier outages. If you're using a message queue system, track the length of the message queue. During a 6-hour Maersk API outage, webhook retry messages can accumulate into millions of backlogged items. Your infrastructure costs spike as queue storage expands, but purging messages risks losing critical shipment updates.

Consider implementing tiered storage for aging webhook retries. Messages less than 1 hour old stay in fast, expensive queues. Messages 1-24 hours old move to medium-speed storage. Messages older than 24 hours migrate to cheap, slow storage with reduced retry frequencies.

Rate limiting requires nuanced implementation in carrier systems. Enforce rate limits to prevent abuse and ensure fair usage of your webhook system. For example, in a multi-tenant SaaS application, limit a tenant to 1M events per day. Anything beyond this should be throttled and deferred. But carrier APIs have their own rate limits—often undocumented and inconsistent.

Popular carrier middleware solutions handle these challenges differently. Platforms like Cargoson, nShift, and EasyPost each implement distinct approaches to webhook retry patterns. ShipStation focuses on aggressive retries for parcel carriers, while ShipEngine emphasises longer retry windows for ocean freight. Understanding these differences helps inform your architectural choices.

Testing Webhook Retry Resilience

Traditional webhook testing involves mocking a few HTTP error codes and validating retry behaviour. Carrier integration testing requires simulating the complex failure modes that define the shipping industry.

Chaos engineering for carrier webhook systems should include:

- Sustained outages: 4-8 hour carrier API downtime

- Partial degradation: 30-second response times with 50% timeout rates

- Data staleness: APIs returning hours-old information

- Rate limit exhaustion: Sudden API quota violations during peak periods

Load testing must account for retry storms. When testing with 10,000 webhook deliveries, assume 20-30% will require retries. Model the compound load this creates on your infrastructure over 24-48 hour periods.

Consider implementing shadow traffic testing with real carrier API responses. Record successful webhook deliveries and replay them against test endpoints to validate retry logic without risking production webhook failures.

Performance and Cost Optimization

The economics of carrier webhook retry systems differ fundamentally from other webhook domains. Implementing comprehensive retry mechanisms, redundant delivery paths, and sophisticated monitoring systems requires significant infrastructure and development investment. However, the business costs of webhook failures can be substantial, including lost sales, customer dissatisfaction, regulatory penalties, and operational disruption costs.

Cost optimisation starts with accepting that not all webhooks deserve equal retry investment. A customs clearance notification for a $50,000 electronics shipment justifies aggressive retry attempts across multiple hours. A delivery exception for a $15 document envelope might not warrant the same resources.

Implement webhook priority tiers based on shipment value, customer SLA requirements, and message criticality. High-priority webhooks get immediate retries and premium queue processing. Low-priority webhooks use longer retry intervals and cheaper storage options.

Queue architecture decisions significantly impact costs. AWS SQS with dead letter queues might cost $50/month for a single-tenant system but $5,000/month for a multi-tenant platform handling millions of carrier webhooks. Apache Kafka requires more operational overhead but provides better cost scaling for high-volume scenarios.

Consider geographical distribution of retry processing. If most carrier APIs are hosted in European data centres, running retry workers in the same regions reduces latency and improves success rates. The infrastructure cost increase often pays for itself through reduced retry attempts.

Monitor the true cost of webhook retries across your platform. Include compute time, storage costs, carrier API consumption charges, and operational overhead. Platforms like Cargoson and others in the carrier middleware space often see retry-related costs consume 20-40% of their infrastructure budget—significantly higher than typical webhook systems.

Optimise retry policies based on historical data. If Hapag-Lloyd's API fails consistently between 2-4 AM GMT during maintenance, automatically increase retry intervals during those windows. If OOCL responds faster to webhook deliveries from Asia-Pacific regions, route retries through those data centres.

Remember that webhook reliability in carrier integration isn't just a technical challenge—it's a competitive advantage. Platforms that consistently deliver critical shipping notifications while competitors struggle with carrier API instability win customers and command premium pricing. The infrastructure investment in robust retry systems pays dividends in customer retention and market positioning.